
Arno Solin

Associate Professor in Machine Learning

Academy of Finland Research Fellow

ELLIS Scholar

Aalto University

ELLIS Institute Finland

Biography

Dr. Arno Solin is an Associate Professor (with tenure) in Machine Learning and an Academy of Finland Research Fellow at the Department of Computer Science, Aalto University, Finland. He is also an ELLIS Scholar, holds an Adjunct Professorship (Title of Docent) at Tampere University, is a member of the Young Academy Finland, and is the coordinating professor of the ‘Next-generation Data-efficient Deep Learning’ program of the Finnish Center for Artificial Intelligence (FCAI). His research interests are in data-efficient machine learning, with a special interest in probabilistic methods for real-time inference and sensor fusion.

At Aalto, Arno leads a research group in machine learning. He is an Editorial Board Reviewer for JMLR and a Senior Area Chair for NeurIPS and AISTATS, and he gave a tutorial on Machine Learning with Signal Processing at ICML 2020. He won the ISIF 2018 Jean-Pierre Le Cadre Best Paper Award and the MLSP 2014 Schizophrenia Classification Challenge on Kaggle. Spectacular AI, a sensor fusion and spatial AI start-up, is a spin-off from his research group.

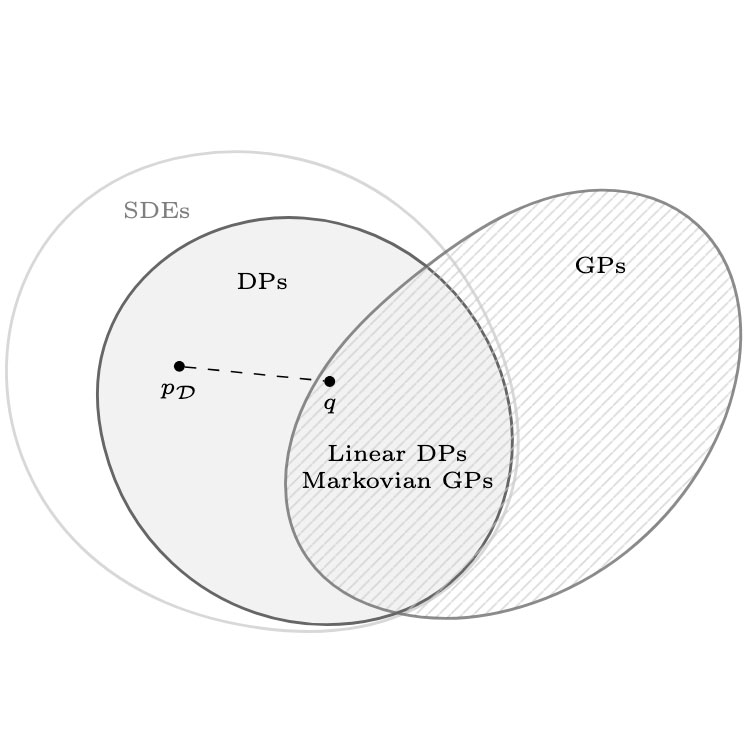

Previously, Arno worked as a team lead in industry (2015–2017) and held an Academy of Finland postdoctoral fellowship (2017–2020). He has also held visiting researcher positions in Prof. Neil Lawrence’s group at the University of Sheffield (2013), the Computational and Biological Learning Lab (CBL) at the University of Cambridge (2017–2018), and Prof. Thomas Schön’s group at Uppsala University (2019). He is a co-author of the book Applied Stochastic Differential Equations, published by Cambridge University Press.

Research Interests

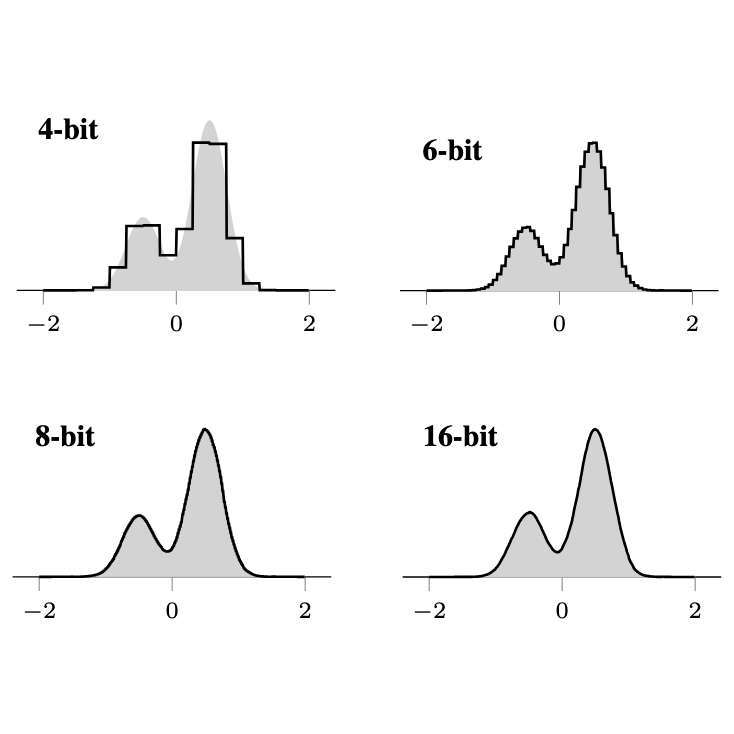

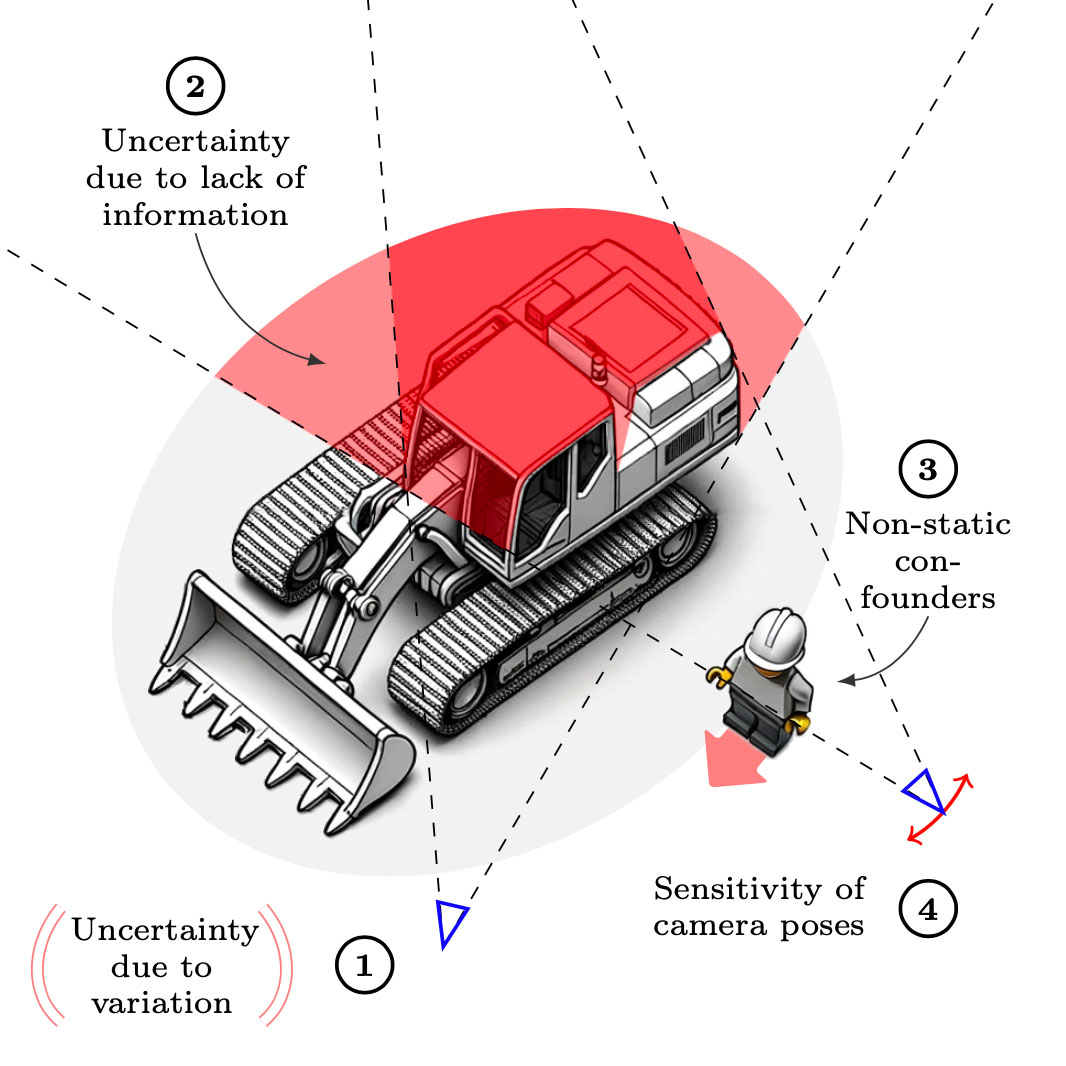

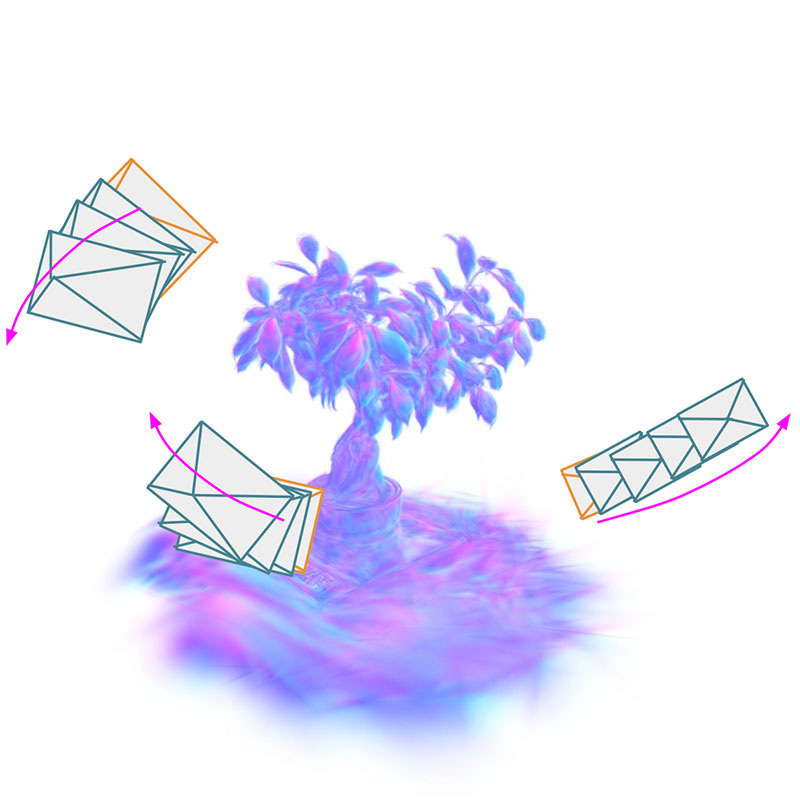

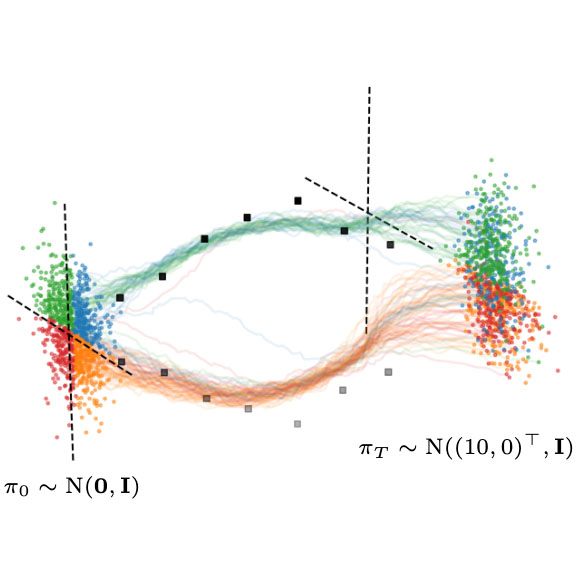

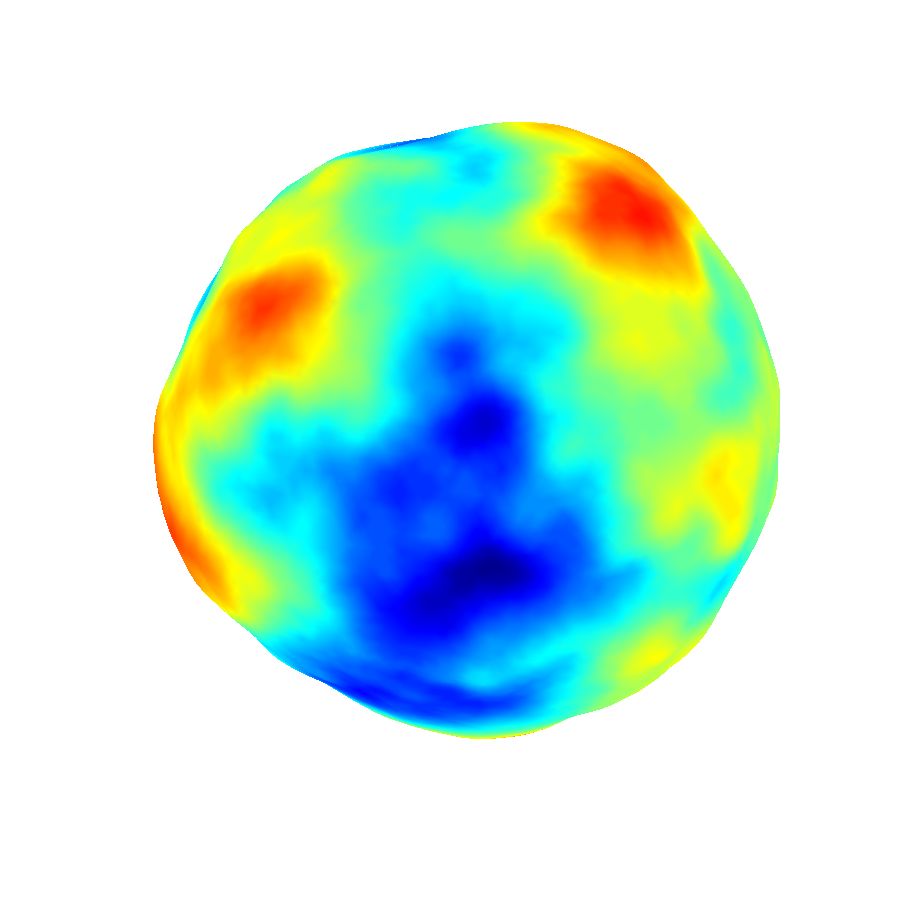

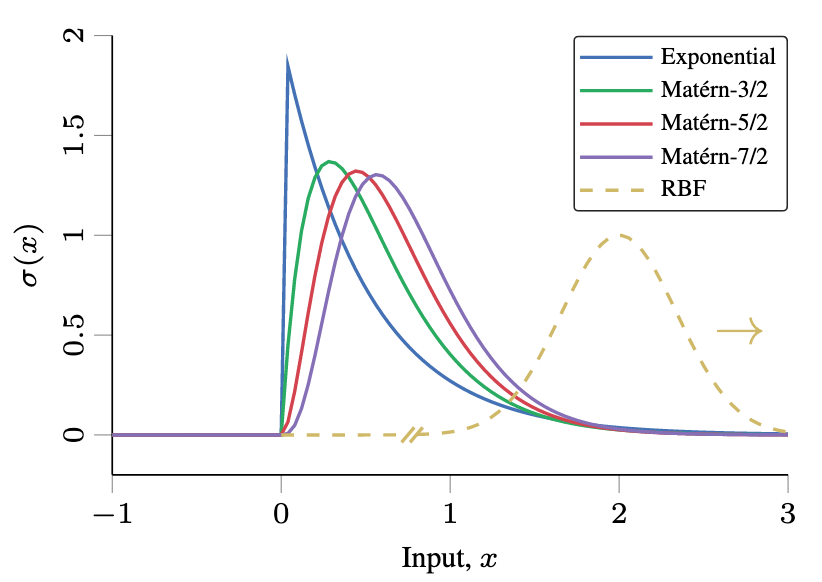

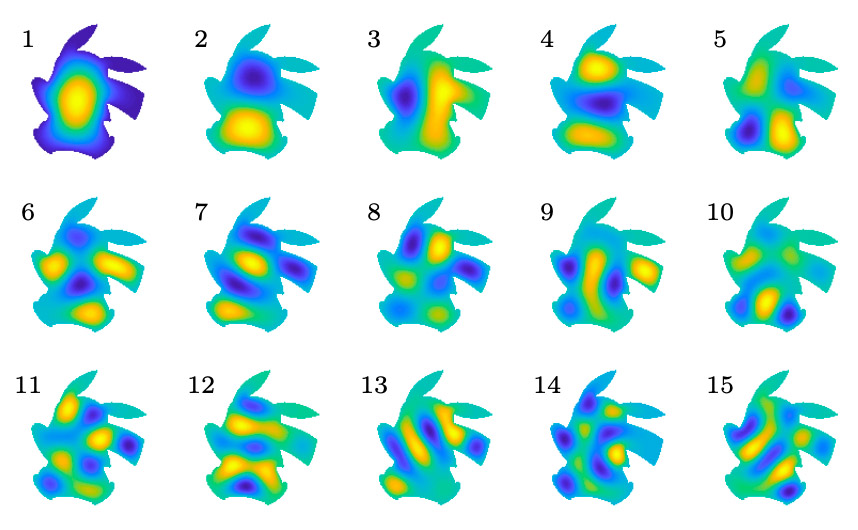

Machine Learning: Probabilistic methods, generative modelling, data-efficient machine learning, Gaussian processes, diffusion models, approximate inference, real-time inference, reinforcement learning.

Signal Processing: Sequential methods, nonlinear state estimation, Kalman filtering, time-series modelling, dynamical systems, system identification.

Sensor Fusion: Real-time inference, computer vision, inter-frame reasoning, perception, odometry, tracking.