Note

Go to the end to download the full example code.

Source-level RSA using a searchlight on surface data#

This example demonstrates how to perform representational similarity analysis (RSA) on source localized MEG data, using a searchlight approach.

In the searchlight approach, representational similarity is computed between the model and searchlight “patches”. A patch is defined by a seed vertex on the cortex and all vertices within a given radius. By default, patches are created using each vertex as a seed point, so you can think of it as a “searchlight” that scans along the cortex.

The radius of a searchlight can be defined in space, in time, or both. In this example, our searchlight will have a spatial radius of 2 cm. and a temporal radius of 20 ms.

The dataset will be the MNE-sample dataset: a collection of 288 epochs in which the participant was presented with an auditory beep or visual stimulus to either the left or right ear or visual field.

# sphinx_gallery_thumbnail_number=2

import mne

import mne_rsa

# Import required packages

from matplotlib import pyplot as plt

mne.set_log_level(False) # Be less verbose

mne.viz.set_3d_backend("pyvista")

'pyvistaqt'

We’ll be using the data from the MNE-sample set. To speed up computations in this example, we’re going to use one of the sparse source spaces from the testing set.

sample_root = mne.datasets.sample.data_path(verbose=True)

testing_root = mne.datasets.testing.data_path(verbose=True)

sample_path = sample_root / "MEG" / "sample"

testing_path = testing_root / "MEG" / "sample"

subjects_dir = sample_root / "subjects"

Creating epochs from the continuous (raw) data. We downsample to 100 Hz to speed up the RSA computations later on.

raw = mne.io.read_raw_fif(sample_path / "sample_audvis_filt-0-40_raw.fif")

events = mne.read_events(sample_path / "sample_audvis_filt-0-40_raw-eve.fif")

event_id = {"audio/left": 1, "audio/right": 2, "visual/left": 3, "visual/right": 4}

epochs = mne.Epochs(raw, events, event_id, preload=True)

epochs.resample(100)

It’s important that the model RDM and the epochs are in the same order, so that each row in the model RDM will correspond to an epoch. The model RDM will be easier to interpret visually if the data is ordered such that all epochs belonging to the same experimental condition are right next to each-other, so patterns jump out. This can be achieved by first splitting the epochs by experimental condition and then concatenating them together again.

epoch_splits = [

epochs[cl] for cl in ["audio/left", "audio/right", "visual/left", "visual/right"]

]

epochs = mne.concatenate_epochs(epoch_splits)

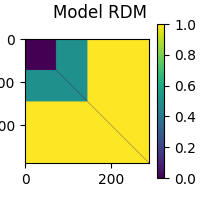

Now that the epochs are in the proper order, we can create a RDM based on the experimental conditions. This type of RDM is referred to as a “sensitivity RDM”. Let’s create a sensitivity RDM that will pick up the left auditory response when RSA-ed against the MEG data. Since we want to capture areas where left beeps generate a large signal, we specify that left beeps should be similar to other left beeps. Since we do not want areas where visual stimuli generate a large signal, we specify that beeps must be different from visual stimuli. Furthermore, since in areas where visual stimuli generate only a small signal, random noise will dominate, we also specify that visual stimuli are different from other visual stimuli. Finally left and right auditory beeps will be somewhat similar.

def sensitivity_metric(event_id_1, event_id_2):

"""Determine similarity between two epochs, given their event ids."""

if event_id_1 == 1 and event_id_2 == 1:

return 0 # Completely similar

if event_id_1 == 2 and event_id_2 == 2:

return 0.5 # Somewhat similar

elif event_id_1 == 1 and event_id_2 == 2:

return 0.5 # Somewhat similar

elif event_id_1 == 2 and event_id_1 == 1:

return 0.5 # Somewhat similar

else:

return 1 # Not similar at all

model_rdm = mne_rsa.compute_rdm(epochs.events[:, 2], metric=sensitivity_metric)

mne_rsa.plot_rdms(model_rdm, title="Model RDM")

<Figure size 200x200 with 2 Axes>

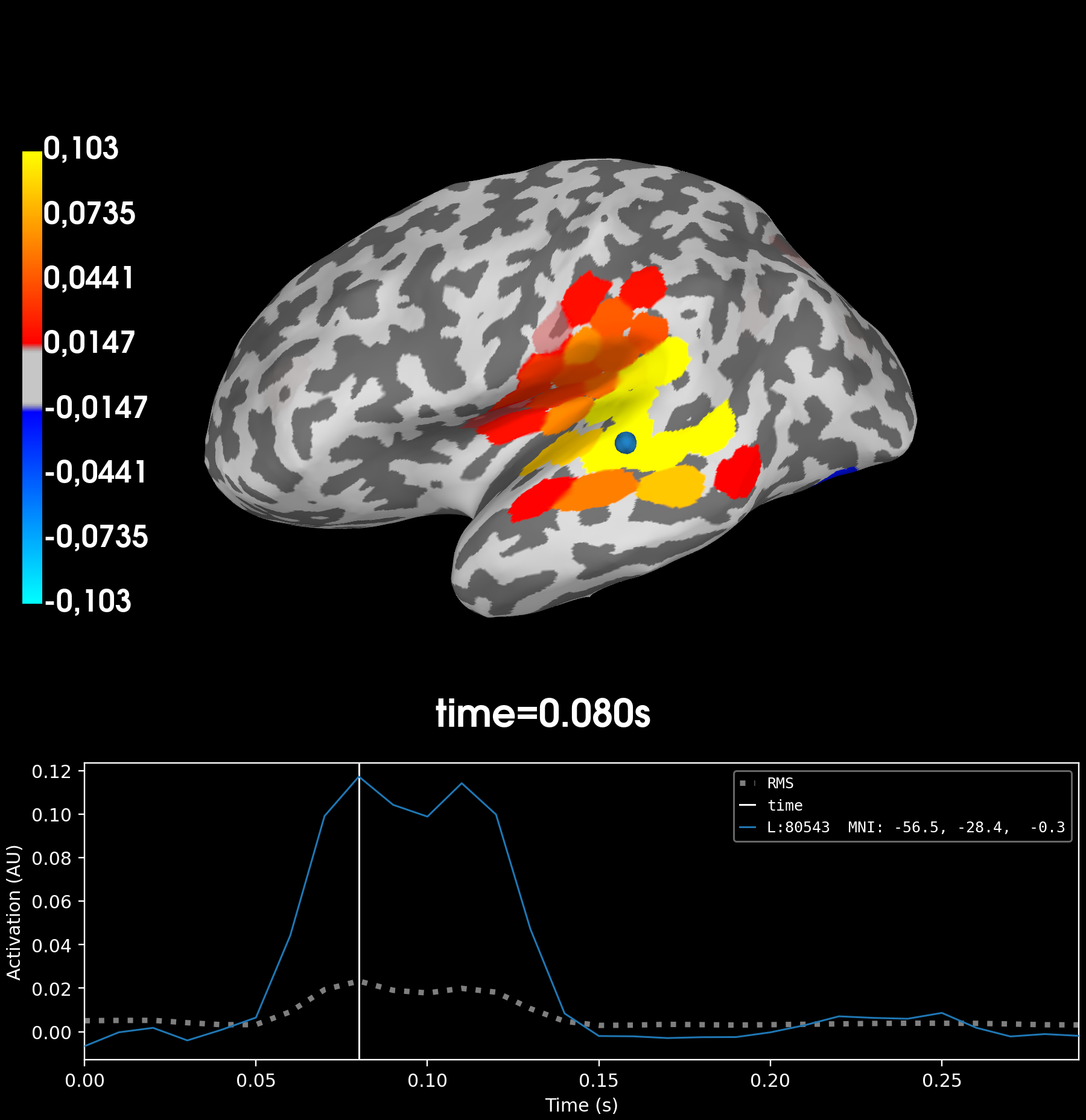

This example is going to be on source-level, so let’s load the inverse operator and apply it to obtain a cortical surface source estimate for each epoch. To speed up the computation, we going to load an inverse operator from the testing dataset that was created using a sparse source space with not too many vertices.

inv = mne.minimum_norm.read_inverse_operator(

testing_path / "sample_audvis_trunc-meg-eeg-oct-4-meg-inv.fif"

)

epochs_stc = mne.minimum_norm.apply_inverse_epochs(epochs, inv, lambda2=0.1111)

Performing the RSA. This will take some time. Consider increasing n_jobs to

parallelize the computation across multiple CPUs.

rsa_vals = mne_rsa.rsa_stcs(

epochs_stc, # The source localized epochs

model_rdm, # The model RDM we constructed above

src=inv["src"], # The inverse operator has our source space

stc_rdm_metric="correlation", # Metric to compute the MEG RDMs

rsa_metric="kendall-tau-a", # Metric to compare model and EEG RDMs

spatial_radius=0.02, # Spatial radius of the searchlight patch

temporal_radius=0.02, # Temporal radius of the searchlight path

tmin=0,

tmax=0.3, # To save time, only analyze this time interval

n_jobs=1, # Only use one CPU core. Increase this for more speed.

verbose=True,

) # Set to True to display a progress bar

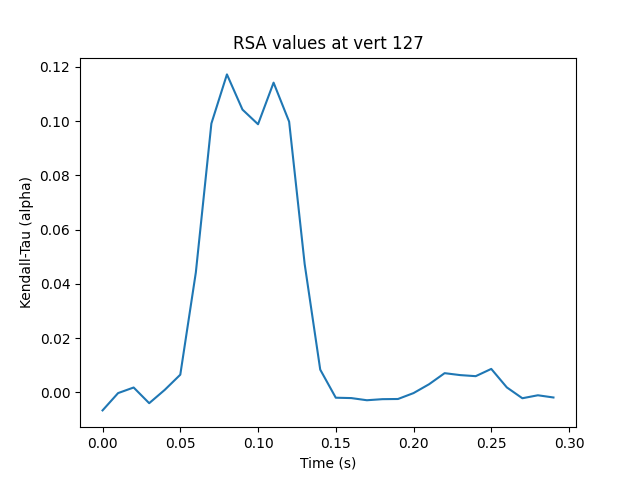

# Find the searchlight patch with highest RSA score

peak_vertex, peak_time = rsa_vals.get_peak(vert_as_index=True)

# Plot the result at the timepoint where the maximum RSA value occurs.

rsa_vals.plot("sample", subjects_dir=subjects_dir, initial_time=peak_time)

Calculating source space distances (limit=20.0 mm)...

Not adding patch information, dist_limit too small

Performing RSA between SourceEstimates and 1 model RDM(s)

Spatial radius: 0.02 meters

Using 498 vertices

Temporal radius: 2 samples

Time interval: 0-0.3 seconds

Number of searchlight patches: 14940

0%| | 0/14940 [00:00<?, ?patch/s]Creating spatio-temporal searchlight patches

0%| | 8/14940 [00:00<03:14, 76.90patch/s]

0%|▏ | 16/14940 [00:00<03:13, 77.00patch/s]

0%|▎ | 25/14940 [00:00<03:09, 78.69patch/s]

0%|▍ | 34/14940 [00:00<03:07, 79.53patch/s]

0%|▍ | 43/14940 [00:00<03:03, 81.35patch/s]

0%|▌ | 52/14940 [00:00<03:06, 79.87patch/s]

0%|▋ | 61/14940 [00:00<03:04, 80.85patch/s]

0%|▊ | 70/14940 [00:00<03:03, 81.17patch/s]

1%|▉ | 79/14940 [00:00<03:05, 80.09patch/s]

1%|▉ | 88/14940 [00:01<03:06, 79.47patch/s]

1%|█ | 96/14940 [00:01<03:09, 78.31patch/s]

1%|█▏ | 104/14940 [00:01<03:08, 78.70patch/s]

1%|█▏ | 113/14940 [00:01<03:07, 79.22patch/s]

1%|█▎ | 122/14940 [00:01<03:03, 80.69patch/s]

1%|█▍ | 131/14940 [00:01<03:05, 79.66patch/s]

1%|█▌ | 139/14940 [00:01<03:07, 78.90patch/s]

1%|█▌ | 147/14940 [00:01<03:09, 77.87patch/s]

1%|█▋ | 155/14940 [00:01<03:11, 77.12patch/s]

1%|█▊ | 164/14940 [00:02<03:08, 78.23patch/s]

1%|█▉ | 172/14940 [00:02<03:08, 78.36patch/s]

1%|█▉ | 181/14940 [00:02<03:05, 79.52patch/s]

1%|██ | 190/14940 [00:02<03:02, 80.66patch/s]

1%|██▏ | 199/14940 [00:02<03:01, 81.05patch/s]

1%|██▎ | 208/14940 [00:02<03:02, 80.70patch/s]

1%|██▍ | 217/14940 [00:02<03:03, 80.41patch/s]

2%|██▍ | 226/14940 [00:02<03:06, 78.89patch/s]

2%|██▌ | 234/14940 [00:02<03:23, 72.36patch/s]

2%|██▋ | 243/14940 [00:03<03:17, 74.53patch/s]

2%|██▊ | 251/14940 [00:03<03:18, 73.99patch/s]

2%|██▊ | 259/14940 [00:03<03:19, 73.46patch/s]

2%|██▉ | 267/14940 [00:03<03:20, 73.36patch/s]

2%|███ | 275/14940 [00:03<03:20, 73.23patch/s]

2%|███▏ | 283/14940 [00:03<03:23, 72.08patch/s]

2%|███▏ | 291/14940 [00:03<03:20, 73.13patch/s]

2%|███▎ | 299/14940 [00:03<03:20, 73.16patch/s]

2%|███▍ | 308/14940 [00:03<03:12, 76.00patch/s]

2%|███▌ | 317/14940 [00:04<03:06, 78.20patch/s]

2%|███▌ | 326/14940 [00:04<03:05, 78.62patch/s]

2%|███▋ | 334/14940 [00:04<03:13, 75.38patch/s]

2%|███▊ | 342/14940 [00:04<03:28, 70.10patch/s]

2%|███▊ | 350/14940 [00:04<03:26, 70.69patch/s]

2%|███▉ | 358/14940 [00:04<03:20, 72.81patch/s]

2%|████ | 366/14940 [00:04<03:26, 70.69patch/s]

3%|████▏ | 374/14940 [00:04<03:31, 68.84patch/s]

3%|████▏ | 381/14940 [00:05<03:33, 68.22patch/s]

3%|████▎ | 388/14940 [00:05<03:34, 67.75patch/s]

3%|████▎ | 395/14940 [00:05<03:34, 67.90patch/s]

3%|████▍ | 403/14940 [00:05<03:26, 70.37patch/s]

3%|████▌ | 412/14940 [00:05<03:16, 73.77patch/s]

3%|████▋ | 420/14940 [00:05<03:17, 73.53patch/s]

3%|████▋ | 429/14940 [00:05<03:10, 76.02patch/s]

3%|████▊ | 438/14940 [00:05<03:05, 78.15patch/s]

3%|████▉ | 447/14940 [00:05<03:00, 80.15patch/s]

3%|█████ | 456/14940 [00:05<02:57, 81.82patch/s]

3%|█████▏ | 465/14940 [00:06<02:54, 82.80patch/s]

3%|█████▏ | 474/14940 [00:06<03:19, 72.53patch/s]

3%|█████▎ | 482/14940 [00:06<03:16, 73.53patch/s]

3%|█████▍ | 491/14940 [00:06<03:09, 76.44patch/s]

3%|█████▌ | 500/14940 [00:06<03:03, 78.56patch/s]

3%|█████▌ | 509/14940 [00:06<03:00, 80.07patch/s]

3%|█████▋ | 518/14940 [00:06<02:58, 80.91patch/s]

4%|█████▊ | 527/14940 [00:06<02:56, 81.51patch/s]

4%|█████▉ | 536/14940 [00:06<02:56, 81.61patch/s]

4%|██████ | 545/14940 [00:07<02:56, 81.58patch/s]

4%|██████ | 554/14940 [00:07<02:55, 82.07patch/s]

4%|██████▏ | 563/14940 [00:07<02:55, 82.11patch/s]

4%|██████▎ | 572/14940 [00:07<02:53, 82.72patch/s]

4%|██████▍ | 581/14940 [00:07<02:52, 83.40patch/s]

4%|██████▌ | 590/14940 [00:07<02:50, 83.93patch/s]

4%|██████▌ | 599/14940 [00:07<02:50, 84.04patch/s]

4%|██████▋ | 608/14940 [00:07<02:49, 84.33patch/s]

4%|██████▊ | 617/14940 [00:07<02:50, 84.22patch/s]

4%|██████▉ | 626/14940 [00:08<02:49, 84.24patch/s]

4%|███████ | 635/14940 [00:08<02:49, 84.50patch/s]

4%|███████ | 644/14940 [00:08<02:49, 84.18patch/s]

4%|███████▏ | 653/14940 [00:08<02:51, 83.33patch/s]

4%|███████▎ | 662/14940 [00:08<02:52, 82.98patch/s]

4%|███████▍ | 671/14940 [00:08<02:51, 82.97patch/s]

5%|███████▌ | 680/14940 [00:08<02:52, 82.81patch/s]

5%|███████▌ | 689/14940 [00:08<02:52, 82.83patch/s]

5%|███████▋ | 698/14940 [00:08<02:51, 82.88patch/s]

5%|███████▊ | 707/14940 [00:09<02:51, 83.00patch/s]

5%|███████▉ | 716/14940 [00:09<02:50, 83.24patch/s]

5%|████████ | 725/14940 [00:09<02:51, 82.73patch/s]

5%|████████ | 734/14940 [00:09<02:50, 83.38patch/s]

5%|████████▏ | 743/14940 [00:09<02:48, 84.10patch/s]

5%|████████▎ | 752/14940 [00:09<02:48, 84.37patch/s]

5%|████████▍ | 761/14940 [00:09<02:48, 84.10patch/s]

5%|████████▌ | 770/14940 [00:09<02:48, 84.17patch/s]

5%|████████▌ | 779/14940 [00:09<02:49, 83.74patch/s]

5%|████████▋ | 788/14940 [00:10<02:50, 83.21patch/s]

5%|████████▊ | 797/14940 [00:10<02:49, 83.67patch/s]

5%|████████▉ | 806/14940 [00:10<02:52, 82.12patch/s]

5%|█████████ | 815/14940 [00:10<02:53, 81.44patch/s]

6%|█████████ | 824/14940 [00:10<02:52, 81.94patch/s]

6%|█████████▏ | 833/14940 [00:10<02:50, 82.97patch/s]

6%|█████████▎ | 842/14940 [00:10<02:50, 82.87patch/s]

6%|█████████▍ | 851/14940 [00:10<02:51, 82.01patch/s]

6%|█████████▍ | 860/14940 [00:10<02:56, 79.77patch/s]

6%|█████████▌ | 868/14940 [00:10<02:57, 79.16patch/s]

6%|█████████▋ | 876/14940 [00:11<02:57, 79.18patch/s]

6%|█████████▊ | 884/14940 [00:11<03:01, 77.28patch/s]

6%|█████████▊ | 893/14940 [00:11<02:58, 78.55patch/s]

6%|█████████▉ | 902/14940 [00:11<02:55, 79.79patch/s]

6%|██████████ | 910/14940 [00:11<02:57, 79.08patch/s]

6%|██████████▏ | 918/14940 [00:11<03:00, 77.76patch/s]

6%|██████████▏ | 926/14940 [00:11<03:06, 75.32patch/s]

6%|██████████▎ | 934/14940 [00:11<03:08, 74.29patch/s]

6%|██████████▍ | 942/14940 [00:11<03:09, 74.01patch/s]

6%|██████████▍ | 950/14940 [00:12<03:08, 74.32patch/s]

6%|██████████▌ | 958/14940 [00:12<03:06, 75.08patch/s]

6%|██████████▋ | 967/14940 [00:12<03:00, 77.35patch/s]

7%|██████████▊ | 975/14940 [00:12<02:59, 77.76patch/s]

7%|██████████▊ | 984/14940 [00:12<02:57, 78.77patch/s]

7%|██████████▉ | 993/14940 [00:12<02:53, 80.39patch/s]

7%|██████████▉ | 1002/14940 [00:12<02:52, 80.94patch/s]

7%|███████████ | 1011/14940 [00:12<02:46, 83.45patch/s]

7%|███████████▏ | 1020/14940 [00:12<02:47, 82.99patch/s]

7%|███████████▎ | 1029/14940 [00:13<02:48, 82.48patch/s]

7%|███████████▍ | 1038/14940 [00:13<02:48, 82.46patch/s]

7%|███████████▍ | 1047/14940 [00:13<03:12, 72.22patch/s]

7%|███████████▌ | 1055/14940 [00:13<03:39, 63.21patch/s]

7%|███████████▋ | 1062/14940 [00:13<03:50, 60.26patch/s]

7%|███████████▋ | 1070/14940 [00:13<03:34, 64.77patch/s]

7%|███████████▊ | 1078/14940 [00:13<03:22, 68.36patch/s]

7%|███████████▉ | 1086/14940 [00:13<03:15, 70.87patch/s]

7%|████████████ | 1094/14940 [00:14<03:10, 72.80patch/s]

7%|████████████ | 1102/14940 [00:14<03:05, 74.64patch/s]

7%|████████████▏ | 1110/14940 [00:14<03:01, 76.01patch/s]

7%|████████████▎ | 1119/14940 [00:14<02:56, 78.29patch/s]

8%|████████████▎ | 1127/14940 [00:14<03:00, 76.67patch/s]

8%|████████████▍ | 1136/14940 [00:14<02:56, 78.03patch/s]

8%|████████████▌ | 1145/14940 [00:14<02:54, 79.25patch/s]

8%|████████████▋ | 1153/14940 [00:14<02:53, 79.34patch/s]

8%|████████████▋ | 1161/14940 [00:14<02:53, 79.23patch/s]

8%|████████████▊ | 1169/14940 [00:14<02:56, 77.81patch/s]

8%|████████████▉ | 1177/14940 [00:15<02:57, 77.54patch/s]

8%|█████████████ | 1185/14940 [00:15<03:26, 66.63patch/s]

8%|█████████████ | 1194/14940 [00:15<03:12, 71.32patch/s]

8%|█████████████▏ | 1202/14940 [00:15<03:07, 73.43patch/s]

8%|█████████████▎ | 1211/14940 [00:15<02:59, 76.50patch/s]

8%|█████████████▍ | 1220/14940 [00:15<02:58, 76.97patch/s]

8%|█████████████▍ | 1228/14940 [00:15<02:56, 77.65patch/s]

8%|█████████████▌ | 1236/14940 [00:15<02:56, 77.44patch/s]

8%|█████████████▋ | 1245/14940 [00:15<02:54, 78.70patch/s]

8%|█████████████▊ | 1254/14940 [00:16<02:50, 80.14patch/s]

8%|█████████████▊ | 1263/14940 [00:16<02:51, 79.81patch/s]

9%|█████████████▉ | 1272/14940 [00:16<02:50, 80.29patch/s]

9%|██████████████ | 1281/14940 [00:16<02:49, 80.64patch/s]

9%|██████████████▏ | 1290/14940 [00:16<02:48, 81.15patch/s]

9%|██████████████▎ | 1299/14940 [00:16<02:47, 81.42patch/s]

9%|██████████████▎ | 1308/14940 [00:16<03:10, 71.72patch/s]

9%|██████████████▍ | 1316/14940 [00:16<03:11, 71.26patch/s]

9%|██████████████▌ | 1325/14940 [00:17<03:03, 74.34patch/s]

9%|██████████████▋ | 1334/14940 [00:17<02:56, 76.96patch/s]

9%|██████████████▋ | 1343/14940 [00:17<02:52, 79.04patch/s]

9%|██████████████▊ | 1352/14940 [00:17<02:49, 80.14patch/s]

9%|██████████████▉ | 1361/14940 [00:17<02:54, 78.03patch/s]

9%|███████████████ | 1369/14940 [00:17<03:04, 73.66patch/s]

9%|███████████████ | 1377/14940 [00:17<03:12, 70.44patch/s]

9%|███████████████▏ | 1385/14940 [00:17<03:21, 67.15patch/s]

9%|███████████████▎ | 1393/14940 [00:17<03:12, 70.34patch/s]

9%|███████████████▍ | 1401/14940 [00:18<03:10, 71.09patch/s]

9%|███████████████▍ | 1409/14940 [00:18<03:04, 73.21patch/s]

9%|███████████████▌ | 1417/14940 [00:18<03:00, 74.79patch/s]

10%|███████████████▋ | 1425/14940 [00:18<03:00, 75.07patch/s]

10%|███████████████▋ | 1433/14940 [00:18<02:58, 75.67patch/s]

10%|███████████████▊ | 1441/14940 [00:18<03:03, 73.64patch/s]

10%|███████████████▉ | 1450/14940 [00:18<03:02, 74.07patch/s]

10%|████████████████ | 1458/14940 [00:18<02:59, 75.04patch/s]

10%|████████████████ | 1466/14940 [00:18<02:57, 76.09patch/s]

10%|████████████████▏ | 1475/14940 [00:19<02:52, 78.11patch/s]

10%|████████████████▎ | 1484/14940 [00:19<02:45, 81.26patch/s]

10%|████████████████▍ | 1493/14940 [00:19<02:42, 82.64patch/s]

10%|████████████████▍ | 1502/14940 [00:19<02:41, 83.34patch/s]

10%|████████████████▌ | 1511/14940 [00:19<02:40, 83.84patch/s]

10%|████████████████▋ | 1520/14940 [00:19<02:40, 83.37patch/s]

10%|████████████████▊ | 1529/14940 [00:19<02:43, 81.78patch/s]

10%|████████████████▉ | 1538/14940 [00:19<02:46, 80.71patch/s]

10%|████████████████▉ | 1547/14940 [00:19<02:46, 80.57patch/s]

10%|█████████████████ | 1556/14940 [00:20<02:46, 80.55patch/s]

10%|█████████████████▏ | 1565/14940 [00:20<02:56, 75.64patch/s]

11%|█████████████████▎ | 1573/14940 [00:20<02:55, 76.18patch/s]

11%|█████████████████▎ | 1581/14940 [00:20<02:53, 77.06patch/s]

11%|█████████████████▍ | 1589/14940 [00:20<02:54, 76.31patch/s]

11%|█████████████████▌ | 1597/14940 [00:20<02:54, 76.64patch/s]

11%|█████████████████▌ | 1605/14940 [00:20<02:54, 76.57patch/s]

11%|█████████████████▋ | 1613/14940 [00:20<03:04, 72.22patch/s]

11%|█████████████████▊ | 1621/14940 [00:20<03:03, 72.60patch/s]

11%|█████████████████▉ | 1629/14940 [00:20<03:00, 73.65patch/s]

11%|█████████████████▉ | 1637/14940 [00:21<03:00, 73.78patch/s]

11%|██████████████████ | 1645/14940 [00:21<03:01, 73.17patch/s]

11%|██████████████████▏ | 1653/14940 [00:21<03:06, 71.09patch/s]

11%|██████████████████▏ | 1661/14940 [00:21<03:06, 71.13patch/s]

11%|██████████████████▎ | 1669/14940 [00:21<03:02, 72.58patch/s]

11%|██████████████████▍ | 1678/14940 [00:21<02:56, 75.23patch/s]

11%|██████████████████▌ | 1686/14940 [00:21<03:01, 73.20patch/s]

11%|██████████████████▌ | 1694/14940 [00:21<03:01, 73.15patch/s]

11%|██████████████████▋ | 1702/14940 [00:21<02:58, 74.03patch/s]

11%|██████████████████▊ | 1711/14940 [00:22<02:53, 76.03patch/s]

12%|██████████████████▉ | 1720/14940 [00:22<02:50, 77.75patch/s]

12%|██████████████████▉ | 1728/14940 [00:22<03:02, 72.38patch/s]

12%|███████████████████ | 1736/14940 [00:22<02:57, 74.21patch/s]

12%|███████████████████▏ | 1745/14940 [00:22<02:50, 77.30patch/s]

12%|███████████████████▎ | 1754/14940 [00:22<02:46, 79.06patch/s]

12%|███████████████████▎ | 1763/14940 [00:22<02:44, 79.93patch/s]

12%|███████████████████▍ | 1772/14940 [00:22<02:44, 79.84patch/s]

12%|███████████████████▌ | 1781/14940 [00:23<02:48, 78.29patch/s]

12%|███████████████████▋ | 1789/14940 [00:23<02:47, 78.50patch/s]

12%|███████████████████▋ | 1797/14940 [00:23<02:46, 78.72patch/s]

12%|███████████████████▊ | 1805/14940 [00:23<02:46, 79.07patch/s]

12%|███████████████████▉ | 1814/14940 [00:23<02:44, 79.62patch/s]

12%|████████████████████ | 1822/14940 [00:23<02:45, 79.50patch/s]

12%|████████████████████ | 1831/14940 [00:23<02:43, 79.96patch/s]

12%|████████████████████▏ | 1840/14940 [00:23<02:41, 81.01patch/s]

12%|████████████████████▎ | 1849/14940 [00:23<02:50, 77.00patch/s]

12%|████████████████████▍ | 1858/14940 [00:23<02:46, 78.57patch/s]

12%|████████████████████▍ | 1867/14940 [00:24<02:43, 79.96patch/s]

13%|████████████████████▌ | 1876/14940 [00:24<02:41, 80.97patch/s]

13%|████████████████████▋ | 1885/14940 [00:24<02:40, 81.31patch/s]

13%|████████████████████▊ | 1894/14940 [00:24<02:50, 76.71patch/s]

13%|████████████████████▉ | 1902/14940 [00:24<02:57, 73.29patch/s]

13%|████████████████████▉ | 1910/14940 [00:24<03:01, 71.86patch/s]

13%|█████████████████████ | 1918/14940 [00:24<03:24, 63.55patch/s]

13%|█████████████████████▏ | 1925/14940 [00:24<03:23, 63.94patch/s]

13%|█████████████████████▏ | 1933/14940 [00:25<03:16, 66.19patch/s]

13%|█████████████████████▎ | 1941/14940 [00:25<03:08, 68.93patch/s]

13%|█████████████████████▍ | 1949/14940 [00:25<03:01, 71.57patch/s]

13%|█████████████████████▍ | 1957/14940 [00:25<02:57, 73.31patch/s]

13%|█████████████████████▌ | 1966/14940 [00:25<02:51, 75.52patch/s]

13%|█████████████████████▋ | 1974/14940 [00:25<02:50, 76.21patch/s]

13%|█████████████████████▊ | 1983/14940 [00:25<02:43, 79.15patch/s]

13%|█████████████████████▊ | 1992/14940 [00:25<02:42, 79.48patch/s]

13%|█████████████████████▉ | 2000/14940 [00:25<02:56, 73.43patch/s]

13%|██████████████████████ | 2008/14940 [00:26<03:04, 70.25patch/s]

13%|██████████████████████▏ | 2016/14940 [00:26<02:58, 72.34patch/s]

14%|██████████████████████▏ | 2025/14940 [00:26<02:51, 75.16patch/s]

14%|██████████████████████▎ | 2033/14940 [00:26<02:49, 76.11patch/s]

14%|██████████████████████▍ | 2042/14940 [00:26<02:45, 77.88patch/s]

14%|██████████████████████▌ | 2051/14940 [00:26<02:45, 77.94patch/s]

14%|██████████████████████▌ | 2059/14940 [00:26<02:47, 76.88patch/s]

14%|██████████████████████▋ | 2068/14940 [00:26<02:44, 78.43patch/s]

14%|██████████████████████▊ | 2077/14940 [00:26<02:41, 79.77patch/s]

14%|██████████████████████▉ | 2086/14940 [00:27<02:39, 80.63patch/s]

14%|██████████████████████▉ | 2095/14940 [00:27<02:43, 78.51patch/s]

14%|███████████████████████ | 2104/14940 [00:27<02:41, 79.57patch/s]

14%|███████████████████████▏ | 2113/14940 [00:27<02:39, 80.46patch/s]

14%|███████████████████████▎ | 2122/14940 [00:27<02:35, 82.26patch/s]

14%|███████████████████████▍ | 2131/14940 [00:27<02:33, 83.30patch/s]

14%|███████████████████████▍ | 2140/14940 [00:27<02:33, 83.60patch/s]

14%|███████████████████████▌ | 2149/14940 [00:27<02:32, 83.65patch/s]

14%|███████████████████████▋ | 2158/14940 [00:27<02:39, 80.21patch/s]

15%|███████████████████████▊ | 2167/14940 [00:28<02:41, 79.26patch/s]

15%|███████████████████████▉ | 2175/14940 [00:28<02:41, 79.19patch/s]

15%|███████████████████████▉ | 2183/14940 [00:28<02:41, 79.19patch/s]

15%|████████████████████████ | 2192/14940 [00:28<02:40, 79.52patch/s]

15%|████████████████████████▏ | 2201/14940 [00:28<02:37, 80.71patch/s]

15%|████████████████████████▎ | 2210/14940 [00:28<02:34, 82.30patch/s]

15%|████████████████████████▎ | 2219/14940 [00:28<02:34, 82.58patch/s]

15%|████████████████████████▍ | 2228/14940 [00:28<02:33, 82.61patch/s]

15%|████████████████████████▌ | 2237/14940 [00:28<02:34, 82.13patch/s]

15%|████████████████████████▋ | 2246/14940 [00:28<02:34, 82.23patch/s]

15%|████████████████████████▊ | 2255/14940 [00:29<02:33, 82.37patch/s]

15%|████████████████████████▊ | 2264/14940 [00:29<02:34, 82.23patch/s]

15%|████████████████████████▉ | 2273/14940 [00:29<02:33, 82.61patch/s]

15%|█████████████████████████ | 2282/14940 [00:29<02:33, 82.31patch/s]

15%|█████████████████████████▏ | 2291/14940 [00:29<02:34, 81.69patch/s]

15%|█████████████████████████▏ | 2300/14940 [00:29<02:36, 80.72patch/s]

15%|█████████████████████████▎ | 2309/14940 [00:29<02:36, 80.75patch/s]

16%|█████████████████████████▍ | 2318/14940 [00:29<02:37, 80.33patch/s]

16%|█████████████████████████▌ | 2327/14940 [00:29<02:37, 80.20patch/s]

16%|█████████████████████████▋ | 2336/14940 [00:30<02:37, 79.82patch/s]

16%|█████████████████████████▋ | 2344/14940 [00:30<02:41, 78.19patch/s]

16%|█████████████████████████▊ | 2353/14940 [00:30<02:38, 79.57patch/s]

16%|█████████████████████████▉ | 2362/14940 [00:30<02:35, 80.73patch/s]

16%|██████████████████████████ | 2371/14940 [00:30<02:34, 81.49patch/s]

16%|██████████████████████████▏ | 2380/14940 [00:30<02:33, 81.66patch/s]

16%|██████████████████████████▏ | 2389/14940 [00:30<02:43, 76.83patch/s]

16%|██████████████████████████▎ | 2397/14940 [00:30<02:43, 76.84patch/s]

16%|██████████████████████████▍ | 2405/14940 [00:30<02:43, 76.68patch/s]

16%|██████████████████████████▍ | 2413/14940 [00:31<02:43, 76.77patch/s]

16%|██████████████████████████▌ | 2421/14940 [00:31<02:42, 77.22patch/s]

16%|██████████████████████████▋ | 2429/14940 [00:31<02:41, 77.63patch/s]

16%|██████████████████████████▊ | 2437/14940 [00:31<02:43, 76.66patch/s]

16%|██████████████████████████▊ | 2445/14940 [00:31<02:44, 76.10patch/s]

16%|██████████████████████████▉ | 2454/14940 [00:31<02:38, 78.74patch/s]

16%|███████████████████████████ | 2463/14940 [00:31<02:36, 79.65patch/s]

17%|███████████████████████████▏ | 2472/14940 [00:31<02:34, 80.73patch/s]

17%|███████████████████████████▏ | 2481/14940 [00:31<02:36, 79.70patch/s]

17%|███████████████████████████▎ | 2489/14940 [00:32<02:37, 78.85patch/s]

17%|███████████████████████████▍ | 2497/14940 [00:32<02:41, 77.06patch/s]

17%|███████████████████████████▍ | 2505/14940 [00:32<02:43, 76.05patch/s]

17%|███████████████████████████▌ | 2513/14940 [00:32<02:48, 73.77patch/s]

17%|███████████████████████████▋ | 2521/14940 [00:32<02:50, 72.95patch/s]

17%|███████████████████████████▊ | 2529/14940 [00:32<02:45, 74.86patch/s]

17%|███████████████████████████▊ | 2537/14940 [00:32<02:46, 74.63patch/s]

17%|███████████████████████████▉ | 2545/14940 [00:32<02:47, 73.94patch/s]

17%|████████████████████████████ | 2553/14940 [00:32<02:47, 73.91patch/s]

17%|████████████████████████████ | 2561/14940 [00:33<02:52, 71.74patch/s]

17%|████████████████████████████▏ | 2569/14940 [00:33<02:49, 72.91patch/s]

17%|████████████████████████████▎ | 2577/14940 [00:33<02:45, 74.48patch/s]

17%|████████████████████████████▍ | 2586/14940 [00:33<02:41, 76.51patch/s]

17%|████████████████████████████▍ | 2595/14940 [00:33<02:38, 77.96patch/s]

17%|████████████████████████████▌ | 2604/14940 [00:33<02:36, 78.58patch/s]

17%|████████████████████████████▋ | 2613/14940 [00:33<02:36, 78.93patch/s]

18%|████████████████████████████▊ | 2622/14940 [00:33<02:33, 79.99patch/s]

18%|████████████████████████████▉ | 2631/14940 [00:33<02:34, 79.50patch/s]

18%|████████████████████████████▉ | 2639/14940 [00:34<02:40, 76.55patch/s]

18%|█████████████████████████████ | 2648/14940 [00:34<02:39, 77.17patch/s]

18%|█████████████████████████████▏ | 2657/14940 [00:34<02:36, 78.51patch/s]

18%|█████████████████████████████▎ | 2666/14940 [00:34<02:35, 79.11patch/s]

18%|█████████████████████████████▎ | 2675/14940 [00:34<02:33, 79.78patch/s]

18%|█████████████████████████████▍ | 2684/14940 [00:34<02:32, 80.17patch/s]

18%|█████████████████████████████▌ | 2693/14940 [00:34<02:32, 80.08patch/s]

18%|█████████████████████████████▋ | 2702/14940 [00:34<02:32, 80.33patch/s]

18%|█████████████████████████████▊ | 2711/14940 [00:34<02:32, 80.31patch/s]

18%|█████████████████████████████▊ | 2720/14940 [00:35<02:30, 81.24patch/s]

18%|█████████████████████████████▉ | 2729/14940 [00:35<02:44, 74.01patch/s]

18%|██████████████████████████████ | 2738/14940 [00:35<02:41, 75.64patch/s]

18%|██████████████████████████████▏ | 2746/14940 [00:35<02:39, 76.42patch/s]

18%|██████████████████████████████▏ | 2755/14940 [00:35<02:37, 77.56patch/s]

19%|██████████████████████████████▎ | 2764/14940 [00:35<02:35, 78.46patch/s]

19%|██████████████████████████████▍ | 2773/14940 [00:35<02:33, 79.11patch/s]

19%|██████████████████████████████▌ | 2782/14940 [00:35<02:31, 80.12patch/s]

19%|██████████████████████████████▋ | 2791/14940 [00:35<02:33, 78.99patch/s]

19%|██████████████████████████████▋ | 2800/14940 [00:36<02:31, 80.06patch/s]

19%|██████████████████████████████▊ | 2809/14940 [00:36<02:32, 79.63patch/s]

19%|██████████████████████████████▉ | 2818/14940 [00:36<02:31, 79.87patch/s]

19%|███████████████████████████████ | 2826/14940 [00:36<02:32, 79.65patch/s]

19%|███████████████████████████████ | 2834/14940 [00:36<02:31, 79.74patch/s]

19%|███████████████████████████████▏ | 2843/14940 [00:36<02:30, 80.28patch/s]

19%|███████████████████████████████▎ | 2852/14940 [00:36<02:28, 81.18patch/s]

19%|███████████████████████████████▍ | 2861/14940 [00:36<02:27, 82.03patch/s]

19%|███████████████████████████████▌ | 2870/14940 [00:36<02:26, 82.63patch/s]

19%|███████████████████████████████▌ | 2879/14940 [00:37<02:27, 81.55patch/s]

19%|███████████████████████████████▋ | 2888/14940 [00:37<02:26, 82.54patch/s]

19%|███████████████████████████████▊ | 2897/14940 [00:37<02:24, 83.27patch/s]

19%|███████████████████████████████▉ | 2906/14940 [00:37<02:23, 83.83patch/s]

20%|███████████████████████████████▉ | 2915/14940 [00:37<02:22, 84.19patch/s]

20%|████████████████████████████████ | 2924/14940 [00:37<02:22, 84.27patch/s]

20%|████████████████████████████████▏ | 2933/14940 [00:37<02:22, 84.37patch/s]

20%|████████████████████████████████▎ | 2942/14940 [00:37<02:22, 84.17patch/s]

20%|████████████████████████████████▍ | 2951/14940 [00:37<02:25, 82.33patch/s]

20%|████████████████████████████████▍ | 2960/14940 [00:38<02:25, 82.13patch/s]

20%|████████████████████████████████▌ | 2969/14940 [00:38<02:30, 79.57patch/s]

20%|████████████████████████████████▋ | 2977/14940 [00:38<02:36, 76.64patch/s]

20%|████████████████████████████████▊ | 2986/14940 [00:38<02:33, 77.83patch/s]

20%|████████████████████████████████▊ | 2994/14940 [00:38<02:35, 76.58patch/s]

20%|████████████████████████████████▉ | 3002/14940 [00:38<02:36, 76.33patch/s]

20%|█████████████████████████████████ | 3010/14940 [00:38<02:36, 76.12patch/s]

20%|█████████████████████████████████▏ | 3019/14940 [00:38<02:33, 77.45patch/s]

20%|█████████████████████████████████▏ | 3027/14940 [00:38<02:39, 74.49patch/s]

20%|█████████████████████████████████▎ | 3035/14940 [00:39<02:45, 71.76patch/s]

20%|█████████████████████████████████▍ | 3043/14940 [00:39<02:41, 73.89patch/s]

20%|█████████████████████████████████▍ | 3051/14940 [00:39<02:39, 74.34patch/s]

20%|█████████████████████████████████▌ | 3059/14940 [00:39<02:41, 73.74patch/s]

21%|█████████████████████████████████▋ | 3067/14940 [00:39<02:40, 73.92patch/s]

21%|█████████████████████████████████▊ | 3075/14940 [00:39<02:41, 73.63patch/s]

21%|█████████████████████████████████▊ | 3083/14940 [00:39<02:38, 74.79patch/s]

21%|█████████████████████████████████▉ | 3091/14940 [00:39<02:37, 75.16patch/s]

21%|██████████████████████████████████ | 3099/14940 [00:39<02:43, 72.26patch/s]

21%|██████████████████████████████████ | 3107/14940 [00:40<02:43, 72.30patch/s]

21%|██████████████████████████████████▏ | 3115/14940 [00:40<02:40, 73.67patch/s]

21%|██████████████████████████████████▎ | 3123/14940 [00:40<02:38, 74.33patch/s]

21%|██████████████████████████████████▎ | 3131/14940 [00:40<02:36, 75.42patch/s]

21%|██████████████████████████████████▍ | 3139/14940 [00:40<02:39, 74.00patch/s]

21%|██████████████████████████████████▌ | 3147/14940 [00:40<02:42, 72.62patch/s]

21%|██████████████████████████████████▋ | 3155/14940 [00:40<02:41, 72.85patch/s]

21%|██████████████████████████████████▋ | 3163/14940 [00:40<02:40, 73.29patch/s]

21%|██████████████████████████████████▊ | 3171/14940 [00:40<02:38, 74.37patch/s]

21%|██████████████████████████████████▉ | 3179/14940 [00:40<02:36, 75.15patch/s]

21%|██████████████████████████████████▉ | 3187/14940 [00:41<02:46, 70.71patch/s]

21%|███████████████████████████████████ | 3195/14940 [00:41<02:42, 72.34patch/s]

21%|███████████████████████████████████▏ | 3203/14940 [00:41<02:38, 73.87patch/s]

21%|███████████████████████████████████▏ | 3211/14940 [00:41<02:36, 74.96patch/s]

22%|███████████████████████████████████▎ | 3219/14940 [00:41<02:36, 74.81patch/s]

22%|███████████████████████████████████▍ | 3227/14940 [00:41<02:36, 75.03patch/s]

22%|███████████████████████████████████▌ | 3235/14940 [00:41<02:35, 75.08patch/s]

22%|███████████████████████████████████▌ | 3244/14940 [00:41<02:32, 76.80patch/s]

22%|███████████████████████████████████▋ | 3252/14940 [00:41<02:35, 75.16patch/s]

22%|███████████████████████████████████▊ | 3260/14940 [00:42<02:44, 71.18patch/s]

22%|███████████████████████████████████▊ | 3268/14940 [00:42<02:57, 65.80patch/s]

22%|███████████████████████████████████▉ | 3275/14940 [00:42<02:55, 66.48patch/s]

22%|████████████████████████████████████ | 3283/14940 [00:42<02:51, 68.05patch/s]

22%|████████████████████████████████████▏ | 3291/14940 [00:42<02:43, 71.18patch/s]

22%|████████████████████████████████████▏ | 3299/14940 [00:42<02:42, 71.78patch/s]

22%|████████████████████████████████████▎ | 3308/14940 [00:42<02:35, 74.65patch/s]

22%|████████████████████████████████████▍ | 3317/14940 [00:42<02:31, 76.64patch/s]

22%|████████████████████████████████████▍ | 3325/14940 [00:42<02:32, 75.98patch/s]

22%|████████████████████████████████████▌ | 3333/14940 [00:43<02:31, 76.49patch/s]

22%|████████████████████████████████████▋ | 3342/14940 [00:43<02:27, 78.72patch/s]

22%|████████████████████████████████████▊ | 3351/14940 [00:43<02:24, 80.01patch/s]

22%|████████████████████████████████████▉ | 3360/14940 [00:43<02:24, 80.36patch/s]

23%|████████████████████████████████████▉ | 3369/14940 [00:43<02:22, 81.18patch/s]

23%|█████████████████████████████████████ | 3378/14940 [00:43<02:24, 79.95patch/s]

23%|█████████████████████████████████████▏ | 3387/14940 [00:43<02:22, 81.19patch/s]

23%|█████████████████████████████████████▎ | 3396/14940 [00:43<02:21, 81.30patch/s]

23%|█████████████████████████████████████▍ | 3405/14940 [00:43<02:22, 80.77patch/s]

23%|█████████████████████████████████████▍ | 3414/14940 [00:44<02:21, 81.66patch/s]

23%|█████████████████████████████████████▌ | 3423/14940 [00:44<02:23, 80.54patch/s]

23%|█████████████████████████████████████▋ | 3432/14940 [00:44<02:23, 80.44patch/s]

23%|█████████████████████████████████████▊ | 3441/14940 [00:44<02:21, 81.29patch/s]

23%|█████████████████████████████████████▊ | 3450/14940 [00:44<02:20, 81.88patch/s]

23%|█████████████████████████████████████▉ | 3459/14940 [00:44<02:19, 82.12patch/s]

23%|██████████████████████████████████████ | 3468/14940 [00:44<02:19, 82.43patch/s]

23%|██████████████████████████████████████▏ | 3477/14940 [00:44<02:18, 83.01patch/s]

23%|██████████████████████████████████████▎ | 3486/14940 [00:44<02:17, 83.46patch/s]

23%|██████████████████████████████████████▎ | 3495/14940 [00:45<02:16, 83.72patch/s]

23%|██████████████████████████████████████▍ | 3504/14940 [00:45<02:16, 83.98patch/s]

24%|██████████████████████████████████████▌ | 3513/14940 [00:45<02:16, 83.69patch/s]

24%|██████████████████████████████████████▋ | 3522/14940 [00:45<02:16, 83.87patch/s]

24%|██████████████████████████████████████▊ | 3531/14940 [00:45<02:17, 83.15patch/s]

24%|██████████████████████████████████████▊ | 3540/14940 [00:45<02:19, 81.52patch/s]

24%|██████████████████████████████████████▉ | 3549/14940 [00:45<02:19, 81.58patch/s]

24%|███████████████████████████████████████ | 3558/14940 [00:45<02:18, 82.13patch/s]

24%|███████████████████████████████████████▏ | 3567/14940 [00:45<02:18, 82.15patch/s]

24%|███████████████████████████████████████▎ | 3576/14940 [00:46<02:17, 82.38patch/s]

24%|███████████████████████████████████████▎ | 3585/14940 [00:46<02:17, 82.73patch/s]

24%|███████████████████████████████████████▍ | 3594/14940 [00:46<02:17, 82.25patch/s]

24%|███████████████████████████████████████▌ | 3603/14940 [00:46<02:18, 81.95patch/s]

24%|███████████████████████████████████████▋ | 3612/14940 [00:46<02:17, 82.12patch/s]

24%|███████████████████████████████████████▋ | 3621/14940 [00:46<02:17, 82.18patch/s]

24%|███████████████████████████████████████▊ | 3630/14940 [00:46<02:17, 82.42patch/s]

24%|███████████████████████████████████████▉ | 3639/14940 [00:46<02:18, 81.45patch/s]

24%|████████████████████████████████████████ | 3648/14940 [00:46<02:19, 81.10patch/s]

24%|████████████████████████████████████████▏ | 3657/14940 [00:47<02:23, 78.84patch/s]

25%|████████████████████████████████████████▏ | 3666/14940 [00:47<02:21, 79.60patch/s]

25%|████████████████████████████████████████▎ | 3675/14940 [00:47<02:20, 80.34patch/s]

25%|████████████████████████████████████████▍ | 3684/14940 [00:47<02:19, 80.92patch/s]

25%|████████████████████████████████████████▌ | 3693/14940 [00:47<02:18, 81.34patch/s]

25%|████████████████████████████████████████▋ | 3702/14940 [00:47<02:19, 80.53patch/s]

25%|████████████████████████████████████████▋ | 3711/14940 [00:47<02:24, 77.78patch/s]

25%|████████████████████████████████████████▊ | 3720/14940 [00:47<02:23, 78.14patch/s]

25%|████████████████████████████████████████▉ | 3728/14940 [00:47<02:26, 76.66patch/s]

25%|█████████████████████████████████████████ | 3736/14940 [00:48<02:27, 76.01patch/s]

25%|█████████████████████████████████████████ | 3744/14940 [00:48<02:25, 77.04patch/s]

25%|█████████████████████████████████████████▏ | 3753/14940 [00:48<02:21, 78.87patch/s]

25%|█████████████████████████████████████████▎ | 3762/14940 [00:48<02:19, 79.92patch/s]

25%|█████████████████████████████████████████▍ | 3771/14940 [00:48<02:19, 80.35patch/s]

25%|█████████████████████████████████████████▍ | 3780/14940 [00:48<02:17, 81.23patch/s]

25%|█████████████████████████████████████████▌ | 3789/14940 [00:48<02:16, 81.46patch/s]

25%|█████████████████████████████████████████▋ | 3798/14940 [00:48<02:17, 81.16patch/s]

25%|█████████████████████████████████████████▊ | 3807/14940 [00:48<02:17, 81.03patch/s]

26%|█████████████████████████████████████████▉ | 3816/14940 [00:49<02:16, 81.49patch/s]

26%|█████████████████████████████████████████▉ | 3825/14940 [00:49<02:16, 81.61patch/s]

26%|██████████████████████████████████████████ | 3834/14940 [00:49<02:15, 81.76patch/s]

26%|██████████████████████████████████████████▏ | 3843/14940 [00:49<02:16, 81.53patch/s]

26%|██████████████████████████████████████████▎ | 3852/14940 [00:49<02:16, 81.24patch/s]

26%|██████████████████████████████████████████▍ | 3861/14940 [00:49<02:15, 81.52patch/s]

26%|██████████████████████████████████████████▍ | 3870/14940 [00:49<02:15, 81.57patch/s]

26%|██████████████████████████████████████████▌ | 3879/14940 [00:49<02:16, 80.79patch/s]

26%|██████████████████████████████████████████▋ | 3888/14940 [00:49<02:32, 72.63patch/s]

26%|██████████████████████████████████████████▊ | 3897/14940 [00:50<02:26, 75.13patch/s]

26%|██████████████████████████████████████████▉ | 3906/14940 [00:50<02:23, 76.79patch/s]

26%|██████████████████████████████████████████▉ | 3914/14940 [00:50<02:22, 77.28patch/s]

26%|███████████████████████████████████████████ | 3922/14940 [00:50<02:25, 75.77patch/s]

26%|███████████████████████████████████████████▏ | 3930/14940 [00:50<02:33, 71.91patch/s]

26%|███████████████████████████████████████████▏ | 3938/14940 [00:50<02:46, 66.06patch/s]

26%|███████████████████████████████████████████▎ | 3945/14940 [00:50<02:47, 65.84patch/s]

26%|███████████████████████████████████████████▍ | 3952/14940 [00:50<02:46, 66.09patch/s]

26%|███████████████████████████████████████████▍ | 3959/14940 [00:50<02:43, 67.01patch/s]

27%|███████████████████████████████████████████▌ | 3968/14940 [00:51<02:33, 71.26patch/s]

27%|███████████████████████████████████████████▋ | 3976/14940 [00:51<02:38, 69.20patch/s]

27%|███████████████████████████████████████████▋ | 3983/14940 [00:51<02:50, 64.18patch/s]

27%|███████████████████████████████████████████▊ | 3990/14940 [00:51<02:56, 62.06patch/s]

27%|███████████████████████████████████████████▉ | 3998/14940 [00:51<02:44, 66.52patch/s]

27%|███████████████████████████████████████████▉ | 4006/14940 [00:51<02:37, 69.32patch/s]

27%|████████████████████████████████████████████ | 4015/14940 [00:51<02:29, 73.31patch/s]

27%|████████████████████████████████████████████▏ | 4023/14940 [00:51<02:25, 75.00patch/s]

27%|████████████████████████████████████████████▏ | 4031/14940 [00:52<02:38, 68.98patch/s]

27%|████████████████████████████████████████████▎ | 4039/14940 [00:52<02:49, 64.39patch/s]

27%|████████████████████████████████████████████▍ | 4046/14940 [00:52<02:47, 65.21patch/s]

27%|████████████████████████████████████████████▌ | 4054/14940 [00:52<02:38, 68.63patch/s]

27%|████████████████████████████████████████████▌ | 4062/14940 [00:52<02:33, 70.79patch/s]

27%|████████████████████████████████████████████▋ | 4071/14940 [00:52<02:27, 73.93patch/s]

27%|████████████████████████████████████████████▊ | 4079/14940 [00:52<02:24, 75.31patch/s]

27%|████████████████████████████████████████████▊ | 4087/14940 [00:52<02:23, 75.49patch/s]

27%|████████████████████████████████████████████▉ | 4096/14940 [00:52<02:20, 77.24patch/s]

27%|█████████████████████████████████████████████ | 4105/14940 [00:53<02:17, 78.52patch/s]

28%|█████████████████████████████████████████████▏ | 4113/14940 [00:53<02:18, 77.89patch/s]

28%|█████████████████████████████████████████████▏ | 4121/14940 [00:53<02:18, 77.98patch/s]

28%|█████████████████████████████████████████████▎ | 4130/14940 [00:53<02:15, 79.49patch/s]

28%|█████████████████████████████████████████████▍ | 4139/14940 [00:53<02:14, 80.41patch/s]

28%|█████████████████████████████████████████████▌ | 4148/14940 [00:53<02:15, 79.40patch/s]

28%|█████████████████████████████████████████████▋ | 4157/14940 [00:53<02:14, 80.11patch/s]

28%|█████████████████████████████████████████████▋ | 4166/14940 [00:53<02:12, 81.09patch/s]

28%|█████████████████████████████████████████████▊ | 4175/14940 [00:53<02:11, 81.94patch/s]

28%|█████████████████████████████████████████████▉ | 4184/14940 [00:53<02:14, 80.26patch/s]

28%|██████████████████████████████████████████████ | 4193/14940 [00:54<02:12, 81.04patch/s]

28%|██████████████████████████████████████████████▏ | 4202/14940 [00:54<02:12, 81.17patch/s]

28%|██████████████████████████████████████████████▏ | 4211/14940 [00:54<02:11, 81.65patch/s]

28%|██████████████████████████████████████████████▎ | 4220/14940 [00:54<02:11, 81.64patch/s]

28%|██████████████████████████████████████████████▍ | 4229/14940 [00:54<02:10, 81.77patch/s]

28%|██████████████████████████████████████████████▌ | 4238/14940 [00:54<02:10, 82.01patch/s]

28%|██████████████████████████████████████████████▌ | 4247/14940 [00:54<02:09, 82.49patch/s]

28%|██████████████████████████████████████████████▋ | 4256/14940 [00:54<02:09, 82.46patch/s]

29%|██████████████████████████████████████████████▊ | 4265/14940 [00:54<02:09, 82.18patch/s]

29%|██████████████████████████████████████████████▉ | 4274/14940 [00:55<02:24, 73.76patch/s]

29%|███████████████████████████████████████████████ | 4282/14940 [00:55<02:21, 75.19patch/s]

29%|███████████████████████████████████████████████ | 4291/14940 [00:55<02:18, 76.73patch/s]

29%|███████████████████████████████████████████████▏ | 4299/14940 [00:55<02:29, 71.41patch/s]

29%|███████████████████████████████████████████████▎ | 4307/14940 [00:55<02:26, 72.48patch/s]

29%|███████████████████████████████████████████████▎ | 4315/14940 [00:55<02:23, 74.01patch/s]

29%|███████████████████████████████████████████████▍ | 4324/14940 [00:55<02:18, 76.49patch/s]

29%|███████████████████████████████████████████████▌ | 4333/14940 [00:55<02:16, 77.95patch/s]

29%|███████████████████████████████████████████████▋ | 4342/14940 [00:56<02:14, 78.92patch/s]

29%|███████████████████████████████████████████████▊ | 4351/14940 [00:56<02:12, 79.78patch/s]

29%|███████████████████████████████████████████████▊ | 4360/14940 [00:56<02:11, 80.21patch/s]

29%|███████████████████████████████████████████████▉ | 4369/14940 [00:56<02:11, 80.43patch/s]

29%|████████████████████████████████████████████████ | 4378/14940 [00:56<02:10, 80.77patch/s]

29%|████████████████████████████████████████████████▏ | 4387/14940 [00:56<02:08, 81.84patch/s]

29%|████████████████████████████████████████████████▎ | 4396/14940 [00:56<02:08, 81.94patch/s]

29%|████████████████████████████████████████████████▎ | 4405/14940 [00:56<02:07, 82.78patch/s]

30%|████████████████████████████████████████████████▍ | 4414/14940 [00:56<02:06, 83.49patch/s]

30%|████████████████████████████████████████████████▌ | 4423/14940 [00:56<02:06, 83.00patch/s]

30%|████████████████████████████████████████████████▋ | 4432/14940 [00:57<02:11, 79.63patch/s]

30%|████████████████████████████████████████████████▋ | 4441/14940 [00:57<02:10, 80.22patch/s]

30%|████████████████████████████████████████████████▊ | 4450/14940 [00:57<02:09, 81.10patch/s]

30%|████████████████████████████████████████████████▉ | 4459/14940 [00:57<02:08, 81.31patch/s]

30%|█████████████████████████████████████████████████ | 4468/14940 [00:57<02:07, 81.90patch/s]

30%|█████████████████████████████████████████████████▏ | 4477/14940 [00:57<02:07, 82.09patch/s]

30%|█████████████████████████████████████████████████▏ | 4486/14940 [00:57<02:07, 82.10patch/s]

30%|█████████████████████████████████████████████████▎ | 4495/14940 [00:57<02:07, 82.18patch/s]

30%|█████████████████████████████████████████████████▍ | 4504/14940 [00:57<02:06, 82.29patch/s]

30%|█████████████████████████████████████████████████▌ | 4513/14940 [00:58<02:06, 82.70patch/s]

30%|█████████████████████████████████████████████████▋ | 4522/14940 [00:58<02:05, 83.11patch/s]

30%|█████████████████████████████████████████████████▋ | 4531/14940 [00:58<02:04, 83.28patch/s]

30%|█████████████████████████████████████████████████▊ | 4540/14940 [00:58<02:08, 81.15patch/s]

30%|█████████████████████████████████████████████████▉ | 4549/14940 [00:58<02:07, 81.23patch/s]

31%|██████████████████████████████████████████████████ | 4558/14940 [00:58<02:09, 80.19patch/s]

31%|██████████████████████████████████████████████████▏ | 4567/14940 [00:58<02:08, 80.52patch/s]

31%|██████████████████████████████████████████████████▏ | 4576/14940 [00:58<02:07, 81.22patch/s]

31%|██████████████████████████████████████████████████▎ | 4585/14940 [00:58<02:08, 80.51patch/s]

31%|██████████████████████████████████████████████████▍ | 4594/14940 [00:59<02:07, 81.22patch/s]

31%|██████████████████████████████████████████████████▌ | 4603/14940 [00:59<02:06, 81.59patch/s]

31%|██████████████████████████████████████████████████▋ | 4612/14940 [00:59<02:11, 78.60patch/s]

31%|██████████████████████████████████████████████████▋ | 4620/14940 [00:59<02:25, 71.17patch/s]

31%|██████████████████████████████████████████████████▊ | 4628/14940 [00:59<02:23, 71.81patch/s]

31%|██████████████████████████████████████████████████▉ | 4636/14940 [00:59<02:27, 70.04patch/s]

31%|██████████████████████████████████████████████████▉ | 4644/14940 [00:59<02:47, 61.44patch/s]

31%|███████████████████████████████████████████████████ | 4652/14940 [00:59<02:36, 65.75patch/s]

31%|███████████████████████████████████████████████████▏ | 4661/14940 [01:00<02:25, 70.41patch/s]

31%|███████████████████████████████████████████████████▎ | 4669/14940 [01:00<02:21, 72.70patch/s]

31%|███████████████████████████████████████████████████▎ | 4677/14940 [01:00<02:17, 74.60patch/s]

31%|███████████████████████████████████████████████████▍ | 4686/14940 [01:00<02:13, 76.87patch/s]

31%|███████████████████████████████████████████████████▌ | 4695/14940 [01:00<02:09, 79.19patch/s]

31%|███████████████████████████████████████████████████▋ | 4704/14940 [01:00<02:06, 80.99patch/s]

32%|███████████████████████████████████████████████████▋ | 4713/14940 [01:00<02:02, 83.31patch/s]

32%|███████████████████████████████████████████████████▊ | 4722/14940 [01:00<02:11, 77.63patch/s]

32%|███████████████████████████████████████████████████▉ | 4730/14940 [01:00<02:22, 71.44patch/s]

32%|████████████████████████████████████████████████████ | 4738/14940 [01:01<02:20, 72.45patch/s]

32%|████████████████████████████████████████████████████ | 4747/14940 [01:01<02:16, 74.65patch/s]

32%|████████████████████████████████████████████████████▏ | 4756/14940 [01:01<02:12, 76.58patch/s]

32%|████████████████████████████████████████████████████▎ | 4764/14940 [01:01<02:11, 77.36patch/s]

32%|████████████████████████████████████████████████████▍ | 4772/14940 [01:01<02:10, 77.66patch/s]

32%|████████████████████████████████████████████████████▍ | 4780/14940 [01:01<02:12, 76.74patch/s]

32%|████████████████████████████████████████████████████▌ | 4788/14940 [01:01<02:13, 75.80patch/s]

32%|████████████████████████████████████████████████████▋ | 4796/14940 [01:01<02:12, 76.71patch/s]

32%|████████████████████████████████████████████████████▋ | 4804/14940 [01:01<02:12, 76.64patch/s]

32%|████████████████████████████████████████████████████▊ | 4812/14940 [01:02<02:13, 75.78patch/s]

32%|████████████████████████████████████████████████████▉ | 4821/14940 [01:02<02:10, 77.70patch/s]

32%|█████████████████████████████████████████████████████ | 4830/14940 [01:02<02:08, 78.86patch/s]

32%|█████████████████████████████████████████████████████ | 4838/14940 [01:02<02:12, 76.39patch/s]

32%|█████████████████████████████████████████████████████▏ | 4846/14940 [01:02<02:12, 76.14patch/s]

32%|█████████████████████████████████████████████████████▎ | 4854/14940 [01:02<02:19, 72.14patch/s]

33%|█████████████████████████████████████████████████████▎ | 4862/14940 [01:02<02:18, 72.74patch/s]

33%|█████████████████████████████████████████████████████▍ | 4870/14940 [01:02<02:17, 73.12patch/s]

33%|█████████████████████████████████████████████████████▌ | 4878/14940 [01:02<02:21, 70.89patch/s]

33%|█████████████████████████████████████████████████████▋ | 4886/14940 [01:03<02:19, 72.28patch/s]

33%|█████████████████████████████████████████████████████▋ | 4894/14940 [01:03<02:23, 70.24patch/s]

33%|█████████████████████████████████████████████████████▊ | 4902/14940 [01:03<02:22, 70.28patch/s]

33%|█████████████████████████████████████████████████████▉ | 4910/14940 [01:03<02:18, 72.19patch/s]

33%|█████████████████████████████████████████████████████▉ | 4918/14940 [01:03<02:15, 74.11patch/s]

33%|██████████████████████████████████████████████████████ | 4927/14940 [01:03<02:11, 76.12patch/s]

33%|██████████████████████████████████████████████████████▏ | 4936/14940 [01:03<02:09, 77.50patch/s]

33%|██████████████████████████████████████████████████████▎ | 4944/14940 [01:03<02:31, 65.92patch/s]

33%|██████████████████████████████████████████████████████▎ | 4952/14940 [01:03<02:24, 68.97patch/s]

33%|██████████████████████████████████████████████████████▍ | 4960/14940 [01:04<02:21, 70.35patch/s]

33%|██████████████████████████████████████████████████████▌ | 4968/14940 [01:04<02:20, 71.18patch/s]

33%|██████████████████████████████████████████████████████▌ | 4976/14940 [01:04<02:18, 71.73patch/s]

33%|██████████████████████████████████████████████████████▋ | 4984/14940 [01:04<02:23, 69.47patch/s]

33%|██████████████████████████████████████████████████████▊ | 4992/14940 [01:04<02:26, 68.03patch/s]

33%|██████████████████████████████████████████████████████▉ | 4999/14940 [01:04<02:48, 59.14patch/s]

34%|██████████████████████████████████████████████████████▉ | 5007/14940 [01:04<02:37, 62.96patch/s]

34%|███████████████████████████████████████████████████████ | 5015/14940 [01:04<02:28, 66.83patch/s]

34%|███████████████████████████████████████████████████████▏ | 5023/14940 [01:05<02:22, 69.40patch/s]

34%|███████████████████████████████████████████████████████▏ | 5031/14940 [01:05<02:18, 71.59patch/s]

34%|███████████████████████████████████████████████████████▎ | 5039/14940 [01:05<02:14, 73.40patch/s]

34%|███████████████████████████████████████████████████████▍ | 5047/14940 [01:05<02:11, 75.14patch/s]

34%|███████████████████████████████████████████████████████▍ | 5055/14940 [01:05<02:10, 75.92patch/s]

34%|███████████████████████████████████████████████████████▌ | 5063/14940 [01:05<02:10, 75.68patch/s]

34%|███████████████████████████████████████████████████████▋ | 5071/14940 [01:05<02:08, 76.76patch/s]

34%|███████████████████████████████████████████████████████▊ | 5079/14940 [01:05<02:17, 71.57patch/s]

34%|███████████████████████████████████████████████████████▊ | 5087/14940 [01:05<02:17, 71.63patch/s]

34%|███████████████████████████████████████████████████████▉ | 5095/14940 [01:05<02:15, 72.83patch/s]

34%|████████████████████████████████████████████████████████ | 5103/14940 [01:06<02:17, 71.64patch/s]

34%|████████████████████████████████████████████████████████ | 5112/14940 [01:06<02:11, 74.97patch/s]

34%|████████████████████████████████████████████████████████▏ | 5120/14940 [01:06<02:08, 76.30patch/s]

34%|████████████████████████████████████████████████████████▎ | 5129/14940 [01:06<02:06, 77.44patch/s]

34%|████████████████████████████████████████████████████████▍ | 5137/14940 [01:06<02:05, 78.14patch/s]

34%|████████████████████████████████████████████████████████▍ | 5145/14940 [01:06<02:09, 75.88patch/s]

34%|████████████████████████████████████████████████████████▌ | 5153/14940 [01:06<02:21, 69.06patch/s]

35%|████████████████████████████████████████████████████████▋ | 5161/14940 [01:06<02:22, 68.54patch/s]

35%|████████████████████████████████████████████████████████▋ | 5169/14940 [01:07<02:18, 70.73patch/s]

35%|████████████████████████████████████████████████████████▊ | 5177/14940 [01:07<02:16, 71.34patch/s]

35%|████████████████████████████████████████████████████████▉ | 5186/14940 [01:07<02:10, 74.86patch/s]

35%|█████████████████████████████████████████████████████████ | 5195/14940 [01:07<02:06, 77.17patch/s]

35%|█████████████████████████████████████████████████████████▏ | 5204/14940 [01:07<02:05, 77.74patch/s]

35%|█████████████████████████████████████████████████████████▏ | 5213/14940 [01:07<02:02, 79.63patch/s]

35%|█████████████████████████████████████████████████████████▎ | 5222/14940 [01:07<02:02, 79.45patch/s]

35%|█████████████████████████████████████████████████████████▍ | 5231/14940 [01:07<02:00, 80.85patch/s]

35%|█████████████████████████████████████████████████████████▌ | 5240/14940 [01:07<01:58, 82.07patch/s]

35%|█████████████████████████████████████████████████████████▌ | 5249/14940 [01:07<01:58, 81.66patch/s]

35%|█████████████████████████████████████████████████████████▋ | 5258/14940 [01:08<01:59, 81.35patch/s]

35%|█████████████████████████████████████████████████████████▊ | 5267/14940 [01:08<02:02, 79.15patch/s]

35%|█████████████████████████████████████████████████████████▉ | 5276/14940 [01:08<02:00, 79.99patch/s]

35%|██████████████████████████████████████████████████████████ | 5285/14940 [01:08<02:01, 79.30patch/s]

35%|██████████████████████████████████████████████████████████ | 5294/14940 [01:08<02:00, 79.95patch/s]

35%|██████████████████████████████████████████████████████████▏ | 5303/14940 [01:08<02:01, 79.11patch/s]

36%|██████████████████████████████████████████████████████████▎ | 5312/14940 [01:08<02:00, 80.07patch/s]

36%|██████████████████████████████████████████████████████████▍ | 5321/14940 [01:08<02:00, 80.14patch/s]

36%|██████████████████████████████████████████████████████████▌ | 5330/14940 [01:09<01:58, 81.13patch/s]

36%|██████████████████████████████████████████████████████████▌ | 5339/14940 [01:09<01:58, 80.80patch/s]

36%|██████████████████████████████████████████████████████████▋ | 5348/14940 [01:09<01:59, 80.45patch/s]

36%|██████████████████████████████████████████████████████████▊ | 5357/14940 [01:09<01:58, 81.16patch/s]

36%|██████████████████████████████████████████████████████████▉ | 5366/14940 [01:09<01:57, 81.66patch/s]

36%|███████████████████████████████████████████████████████████ | 5375/14940 [01:09<01:56, 82.18patch/s]

36%|███████████████████████████████████████████████████████████ | 5384/14940 [01:09<01:57, 81.04patch/s]

36%|███████████████████████████████████████████████████████████▏ | 5393/14940 [01:09<01:57, 81.59patch/s]

36%|███████████████████████████████████████████████████████████▎ | 5402/14940 [01:09<01:57, 81.32patch/s]

36%|███████████████████████████████████████████████████████████▍ | 5411/14940 [01:09<01:57, 81.29patch/s]

36%|███████████████████████████████████████████████████████████▍ | 5420/14940 [01:10<02:02, 77.46patch/s]

36%|███████████████████████████████████████████████████████████▌ | 5429/14940 [01:10<02:00, 78.72patch/s]

36%|███████████████████████████████████████████████████████████▋ | 5438/14940 [01:10<01:58, 79.90patch/s]

36%|███████████████████████████████████████████████████████████▊ | 5447/14940 [01:10<01:58, 79.81patch/s]

37%|███████████████████████████████████████████████████████████▉ | 5456/14940 [01:10<02:04, 76.31patch/s]

37%|███████████████████████████████████████████████████████████▉ | 5464/14940 [01:10<02:09, 72.98patch/s]

37%|████████████████████████████████████████████████████████████ | 5472/14940 [01:10<02:10, 72.35patch/s]

37%|████████████████████████████████████████████████████████████▏ | 5481/14940 [01:10<02:05, 75.44patch/s]

37%|████████████████████████████████████████████████████████████▎ | 5489/14940 [01:11<02:03, 76.65patch/s]

37%|████████████████████████████████████████████████████████████▎ | 5498/14940 [01:11<02:01, 77.81patch/s]

37%|████████████████████████████████████████████████████████████▍ | 5507/14940 [01:11<01:58, 79.27patch/s]

37%|████████████████████████████████████████████████████████████▌ | 5516/14940 [01:11<01:57, 80.16patch/s]

37%|████████████████████████████████████████████████████████████▋ | 5525/14940 [01:11<01:56, 81.12patch/s]

37%|████████████████████████████████████████████████████████████▋ | 5534/14940 [01:11<01:54, 81.98patch/s]

37%|████████████████████████████████████████████████████████████▊ | 5543/14940 [01:11<01:53, 82.79patch/s]

37%|████████████████████████████████████████████████████████████▉ | 5552/14940 [01:11<01:54, 81.71patch/s]

37%|█████████████████████████████████████████████████████████████ | 5561/14940 [01:11<01:58, 79.06patch/s]

37%|█████████████████████████████████████████████████████████████▏ | 5569/14940 [01:12<02:12, 70.62patch/s]

37%|█████████████████████████████████████████████████████████████▏ | 5577/14940 [01:12<02:13, 70.28patch/s]

37%|█████████████████████████████████████████████████████████████▎ | 5585/14940 [01:12<02:10, 71.73patch/s]

37%|█████████████████████████████████████████████████████████████▍ | 5594/14940 [01:12<02:04, 75.26patch/s]

38%|█████████████████████████████████████████████████████████████▌ | 5603/14940 [01:12<02:00, 77.60patch/s]

38%|█████████████████████████████████████████████████████████████▌ | 5611/14940 [01:12<02:05, 74.04patch/s]

38%|█████████████████████████████████████████████████████████████▋ | 5619/14940 [01:12<02:03, 75.49patch/s]

38%|█████████████████████████████████████████████████████████████▊ | 5628/14940 [01:12<02:00, 77.42patch/s]

38%|█████████████████████████████████████████████████████████████▉ | 5637/14940 [01:12<01:58, 78.44patch/s]

38%|█████████████████████████████████████████████████████████████▉ | 5646/14940 [01:13<01:56, 79.75patch/s]

38%|██████████████████████████████████████████████████████████████ | 5655/14940 [01:13<01:54, 80.75patch/s]

38%|██████████████████████████████████████████████████████████████▏ | 5664/14940 [01:13<01:54, 81.28patch/s]

38%|██████████████████████████████████████████████████████████████▎ | 5673/14940 [01:13<01:53, 81.75patch/s]

38%|██████████████████████████████████████████████████████████████▎ | 5682/14940 [01:13<01:52, 82.26patch/s]

38%|██████████████████████████████████████████████████████████████▍ | 5691/14940 [01:13<01:54, 81.02patch/s]

38%|██████████████████████████████████████████████████████████████▌ | 5700/14940 [01:13<01:52, 81.79patch/s]

38%|██████████████████████████████████████████████████████████████▋ | 5709/14940 [01:13<01:50, 83.19patch/s]

38%|██████████████████████████████████████████████████████████████▊ | 5718/14940 [01:13<01:49, 84.00patch/s]

38%|██████████████████████████████████████████████████████████████▊ | 5727/14940 [01:14<01:48, 84.62patch/s]

38%|██████████████████████████████████████████████████████████████▉ | 5736/14940 [01:14<01:49, 84.33patch/s]

38%|███████████████████████████████████████████████████████████████ | 5745/14940 [01:14<01:49, 84.15patch/s]

39%|███████████████████████████████████████████████████████████████▏ | 5754/14940 [01:14<01:48, 84.64patch/s]

39%|███████████████████████████████████████████████████████████████▎ | 5763/14940 [01:14<01:47, 85.24patch/s]

39%|███████████████████████████████████████████████████████████████▎ | 5772/14940 [01:14<01:48, 84.30patch/s]

39%|███████████████████████████████████████████████████████████████▍ | 5781/14940 [01:14<01:49, 83.89patch/s]

39%|███████████████████████████████████████████████████████████████▌ | 5790/14940 [01:14<01:49, 83.39patch/s]

39%|███████████████████████████████████████████████████████████████▋ | 5799/14940 [01:14<01:50, 82.57patch/s]

39%|███████████████████████████████████████████████████████████████▊ | 5808/14940 [01:14<01:50, 82.55patch/s]

39%|███████████████████████████████████████████████████████████████▊ | 5817/14940 [01:15<01:56, 78.31patch/s]

39%|███████████████████████████████████████████████████████████████▉ | 5826/14940 [01:15<01:54, 79.28patch/s]

39%|████████████████████████████████████████████████████████████████ | 5835/14940 [01:15<01:53, 80.29patch/s]

39%|████████████████████████████████████████████████████████████████▏ | 5844/14940 [01:15<01:54, 79.18patch/s]

39%|████████████████████████████████████████████████████████████████▏ | 5852/14940 [01:15<01:55, 78.92patch/s]

39%|████████████████████████████████████████████████████████████████▎ | 5860/14940 [01:15<01:59, 75.99patch/s]

39%|████████████████████████████████████████████████████████████████▍ | 5868/14940 [01:15<02:00, 75.19patch/s]

39%|████████████████████████████████████████████████████████████████▌ | 5876/14940 [01:15<02:02, 74.25patch/s]

39%|████████████████████████████████████████████████████████████████▌ | 5885/14940 [01:16<01:57, 76.97patch/s]

39%|████████████████████████████████████████████████████████████████▋ | 5894/14940 [01:16<01:55, 78.38patch/s]

40%|████████████████████████████████████████████████████████████████▊ | 5903/14940 [01:16<02:04, 72.80patch/s]

40%|████████████████████████████████████████████████████████████████▉ | 5912/14940 [01:16<01:59, 75.78patch/s]

40%|████████████████████████████████████████████████████████████████▉ | 5921/14940 [01:16<01:55, 77.78patch/s]

40%|█████████████████████████████████████████████████████████████████ | 5929/14940 [01:16<01:56, 77.46patch/s]

40%|█████████████████████████████████████████████████████████████████▏ | 5938/14940 [01:16<01:54, 78.89patch/s]

40%|█████████████████████████████████████████████████████████████████▎ | 5947/14940 [01:16<01:52, 79.81patch/s]

40%|█████████████████████████████████████████████████████████████████▍ | 5956/14940 [01:16<01:51, 80.81patch/s]

40%|█████████████████████████████████████████████████████████████████▍ | 5965/14940 [01:17<01:50, 81.38patch/s]

40%|█████████████████████████████████████████████████████████████████▌ | 5974/14940 [01:17<01:49, 81.66patch/s]

40%|█████████████████████████████████████████████████████████████████▋ | 5983/14940 [01:17<01:49, 82.09patch/s]

40%|█████████████████████████████████████████████████████████████████▊ | 5992/14940 [01:17<01:48, 82.45patch/s]

40%|█████████████████████████████████████████████████████████████████▊ | 6001/14940 [01:17<01:48, 82.38patch/s]

40%|█████████████████████████████████████████████████████████████████▉ | 6010/14940 [01:17<01:52, 79.69patch/s]

40%|██████████████████████████████████████████████████████████████████ | 6018/14940 [01:17<01:52, 79.58patch/s]

40%|██████████████████████████████████████████████████████████████████▏ | 6027/14940 [01:17<01:50, 80.33patch/s]

40%|██████████████████████████████████████████████████████████████████▎ | 6036/14940 [01:17<01:53, 78.58patch/s]

40%|██████████████████████████████████████████████████████████████████▎ | 6045/14940 [01:18<01:51, 79.47patch/s]

41%|██████████████████████████████████████████████████████████████████▍ | 6053/14940 [01:18<01:52, 79.29patch/s]

41%|██████████████████████████████████████████████████████████████████▌ | 6062/14940 [01:18<01:52, 79.15patch/s]

41%|██████████████████████████████████████████████████████████████████▋ | 6071/14940 [01:18<01:50, 80.41patch/s]

41%|██████████████████████████████████████████████████████████████████▋ | 6080/14940 [01:18<01:48, 81.43patch/s]

41%|██████████████████████████████████████████████████████████████████▊ | 6089/14940 [01:18<01:47, 82.33patch/s]

41%|██████████████████████████████████████████████████████████████████▉ | 6098/14940 [01:18<01:46, 82.89patch/s]

41%|███████████████████████████████████████████████████████████████████ | 6107/14940 [01:18<01:46, 82.86patch/s]

41%|███████████████████████████████████████████████████████████████████▏ | 6116/14940 [01:18<01:47, 82.37patch/s]

41%|███████████████████████████████████████████████████████████████████▏ | 6125/14940 [01:19<01:59, 73.57patch/s]

41%|███████████████████████████████████████████████████████████████████▎ | 6133/14940 [01:19<02:00, 73.33patch/s]

41%|███████████████████████████████████████████████████████████████████▍ | 6141/14940 [01:19<01:59, 73.78patch/s]

41%|███████████████████████████████████████████████████████████████████▍ | 6149/14940 [01:19<01:58, 74.07patch/s]

41%|███████████████████████████████████████████████████████████████████▌ | 6157/14940 [01:19<01:56, 75.43patch/s]

41%|███████████████████████████████████████████████████████████████████▋ | 6165/14940 [01:19<01:56, 75.36patch/s]

41%|███████████████████████████████████████████████████████████████████▊ | 6173/14940 [01:19<01:57, 74.54patch/s]

41%|███████████████████████████████████████████████████████████████████▊ | 6182/14940 [01:19<01:54, 76.40patch/s]

41%|███████████████████████████████████████████████████████████████████▉ | 6191/14940 [01:19<01:51, 78.20patch/s]

41%|████████████████████████████████████████████████████████████████████ | 6200/14940 [01:20<01:49, 79.56patch/s]

42%|████████████████████████████████████████████████████████████████████▏ | 6208/14940 [01:20<01:55, 75.43patch/s]

42%|████████████████████████████████████████████████████████████████████▏ | 6216/14940 [01:20<02:02, 71.19patch/s]

42%|████████████████████████████████████████████████████████████████████▎ | 6224/14940 [01:20<01:59, 72.88patch/s]

42%|████████████████████████████████████████████████████████████████████▍ | 6232/14940 [01:20<01:56, 74.77patch/s]

42%|████████████████████████████████████████████████████████████████████▌ | 6241/14940 [01:20<01:53, 76.56patch/s]

42%|████████████████████████████████████████████████████████████████████▌ | 6250/14940 [01:20<01:51, 78.23patch/s]

42%|████████████████████████████████████████████████████████████████████▋ | 6259/14940 [01:20<01:49, 79.01patch/s]

42%|████████████████████████████████████████████████████████████████████▊ | 6268/14940 [01:20<01:49, 79.45patch/s]

42%|████████████████████████████████████████████████████████████████████▉ | 6277/14940 [01:21<01:48, 79.75patch/s]

42%|█████████████████████████████████████████████████████████████████████ | 6286/14940 [01:21<01:47, 80.50patch/s]

42%|█████████████████████████████████████████████████████████████████████ | 6295/14940 [01:21<01:45, 81.64patch/s]

42%|█████████████████████████████████████████████████████████████████████▏ | 6304/14940 [01:21<01:45, 82.13patch/s]

42%|█████████████████████████████████████████████████████████████████████▎ | 6313/14940 [01:21<01:44, 82.75patch/s]

42%|█████████████████████████████████████████████████████████████████████▍ | 6322/14940 [01:21<01:43, 82.95patch/s]

42%|█████████████████████████████████████████████████████████████████████▍ | 6331/14940 [01:21<01:48, 79.12patch/s]

42%|█████████████████████████████████████████████████████████████████████▌ | 6340/14940 [01:21<01:47, 80.23patch/s]

42%|█████████████████████████████████████████████████████████████████████▋ | 6349/14940 [01:21<01:46, 80.77patch/s]

43%|█████████████████████████████████████████████████████████████████████▊ | 6358/14940 [01:22<01:48, 78.86patch/s]

43%|█████████████████████████████████████████████████████████████████████▉ | 6366/14940 [01:22<01:51, 76.79patch/s]

43%|█████████████████████████████████████████████████████████████████████▉ | 6374/14940 [01:22<01:51, 76.81patch/s]

43%|██████████████████████████████████████████████████████████████████████ | 6382/14940 [01:22<01:50, 77.52patch/s]

43%|██████████████████████████████████████████████████████████████████████▏ | 6391/14940 [01:22<01:48, 78.52patch/s]

43%|██████████████████████████████████████████████████████████████████████▏ | 6399/14940 [01:22<01:49, 78.30patch/s]

43%|██████████████████████████████████████████████████████████████████████▎ | 6408/14940 [01:22<01:46, 80.05patch/s]

43%|██████████████████████████████████████████████████████████████████████▍ | 6417/14940 [01:22<01:46, 79.83patch/s]

43%|██████████████████████████████████████████████████████████████████████▌ | 6425/14940 [01:22<01:49, 77.41patch/s]

43%|██████████████████████████████████████████████████████████████████████▌ | 6433/14940 [01:22<01:50, 76.90patch/s]

43%|██████████████████████████████████████████████████████████████████████▋ | 6441/14940 [01:23<01:55, 73.53patch/s]

43%|██████████████████████████████████████████████████████████████████████▊ | 6449/14940 [01:23<01:57, 72.19patch/s]

43%|██████████████████████████████████████████████████████████████████████▉ | 6458/14940 [01:23<01:53, 74.53patch/s]

43%|██████████████████████████████████████████████████████████████████████▉ | 6466/14940 [01:23<01:52, 75.58patch/s]

43%|███████████████████████████████████████████████████████████████████████ | 6474/14940 [01:23<01:54, 74.10patch/s]

43%|███████████████████████████████████████████████████████████████████████▏ | 6482/14940 [01:23<01:53, 74.48patch/s]

43%|███████████████████████████████████████████████████████████████████████▎ | 6491/14940 [01:23<01:50, 76.56patch/s]

44%|███████████████████████████████████████████████████████████████████████▎ | 6499/14940 [01:23<01:51, 75.40patch/s]

44%|███████████████████████████████████████████████████████████████████████▍ | 6507/14940 [01:23<01:52, 75.20patch/s]

44%|███████████████████████████████████████████████████████████████████████▌ | 6515/14940 [01:24<01:51, 75.89patch/s]

44%|███████████████████████████████████████████████████████████████████████▌ | 6523/14940 [01:24<01:52, 74.83patch/s]

44%|███████████████████████████████████████████████████████████████████████▋ | 6531/14940 [01:24<01:52, 74.69patch/s]

44%|███████████████████████████████████████████████████████████████████████▊ | 6539/14940 [01:24<01:51, 75.35patch/s]

44%|███████████████████████████████████████████████████████████████████████▊ | 6547/14940 [01:24<01:49, 76.56patch/s]

44%|███████████████████████████████████████████████████████████████████████▉ | 6555/14940 [01:24<01:48, 77.34patch/s]

44%|████████████████████████████████████████████████████████████████████████ | 6564/14940 [01:24<01:46, 78.62patch/s]

44%|████████████████████████████████████████████████████████████████████████▏ | 6572/14940 [01:24<01:46, 78.91patch/s]

44%|████████████████████████████████████████████████████████████████████████▏ | 6581/14940 [01:24<01:44, 79.83patch/s]

44%|████████████████████████████████████████████████████████████████████████▎ | 6590/14940 [01:25<01:42, 81.23patch/s]

44%|████████████████████████████████████████████████████████████████████████▍ | 6599/14940 [01:25<01:47, 77.63patch/s]

44%|████████████████████████████████████████████████████████████████████████▌ | 6607/14940 [01:25<01:46, 78.27patch/s]

44%|████████████████████████████████████████████████████████████████████████▋ | 6616/14940 [01:25<01:45, 78.90patch/s]

44%|████████████████████████████████████████████████████████████████████████▋ | 6625/14940 [01:25<01:43, 80.20patch/s]

44%|████████████████████████████████████████████████████████████████████████▊ | 6634/14940 [01:25<01:44, 79.35patch/s]

44%|████████████████████████████████████████████████████████████████████████▉ | 6642/14940 [01:25<01:45, 78.88patch/s]

45%|████████████████████████████████████████████████████████████████████████▉ | 6650/14940 [01:25<01:46, 77.85patch/s]

45%|█████████████████████████████████████████████████████████████████████████ | 6658/14940 [01:25<01:45, 78.17patch/s]

45%|█████████████████████████████████████████████████████████████████████████▏ | 6667/14940 [01:26<01:44, 79.18patch/s]

45%|█████████████████████████████████████████████████████████████████████████▎ | 6675/14940 [01:26<01:50, 75.01patch/s]

45%|█████████████████████████████████████████████████████████████████████████▎ | 6683/14940 [01:26<01:51, 73.97patch/s]

45%|█████████████████████████████████████████████████████████████████████████▍ | 6692/14940 [01:26<01:47, 76.43patch/s]

45%|█████████████████████████████████████████████████████████████████████████▌ | 6701/14940 [01:26<01:45, 78.27patch/s]

45%|█████████████████████████████████████████████████████████████████████████▋ | 6710/14940 [01:26<01:43, 79.37patch/s]

45%|█████████████████████████████████████████████████████████████████████████▊ | 6719/14940 [01:26<01:41, 80.86patch/s]

45%|█████████████████████████████████████████████████████████████████████████▊ | 6728/14940 [01:26<01:40, 82.04patch/s]

45%|█████████████████████████████████████████████████████████████████████████▉ | 6737/14940 [01:26<01:40, 81.89patch/s]

45%|██████████████████████████████████████████████████████████████████████████ | 6746/14940 [01:27<01:40, 81.92patch/s]

45%|██████████████████████████████████████████████████████████████████████████▏ | 6755/14940 [01:27<01:40, 81.54patch/s]

45%|██████████████████████████████████████████████████████████████████████████▎ | 6764/14940 [01:27<01:39, 82.15patch/s]

45%|██████████████████████████████████████████████████████████████████████████▎ | 6773/14940 [01:27<01:38, 82.58patch/s]

45%|██████████████████████████████████████████████████████████████████████████▍ | 6782/14940 [01:27<01:40, 81.18patch/s]

45%|██████████████████████████████████████████████████████████████████████████▌ | 6791/14940 [01:27<01:40, 81.35patch/s]

46%|██████████████████████████████████████████████████████████████████████████▋ | 6800/14940 [01:27<01:40, 80.72patch/s]

46%|██████████████████████████████████████████████████████████████████████████▋ | 6809/14940 [01:27<01:39, 81.46patch/s]

46%|██████████████████████████████████████████████████████████████████████████▊ | 6818/14940 [01:27<01:39, 81.71patch/s]

46%|██████████████████████████████████████████████████████████████████████████▉ | 6827/14940 [01:28<01:38, 82.27patch/s]

46%|███████████████████████████████████████████████████████████████████████████ | 6836/14940 [01:28<01:38, 81.92patch/s]

46%|███████████████████████████████████████████████████████████████████████████▏ | 6845/14940 [01:28<01:39, 81.08patch/s]

46%|███████████████████████████████████████████████████████████████████████████▏ | 6854/14940 [01:28<01:38, 82.30patch/s]

46%|███████████████████████████████████████████████████████████████████████████▎ | 6863/14940 [01:28<01:40, 80.02patch/s]

46%|███████████████████████████████████████████████████████████████████████████▍ | 6872/14940 [01:28<01:45, 76.68patch/s]

46%|███████████████████████████████████████████████████████████████████████████▌ | 6880/14940 [01:28<01:49, 73.84patch/s]

46%|███████████████████████████████████████████████████████████████████████████▌ | 6888/14940 [01:28<01:48, 74.13patch/s]

46%|███████████████████████████████████████████████████████████████████████████▋ | 6896/14940 [01:28<01:46, 75.66patch/s]

46%|███████████████████████████████████████████████████████████████████████████▊ | 6905/14940 [01:29<01:43, 77.34patch/s]

46%|███████████████████████████████████████████████████████████████████████████▉ | 6913/14940 [01:29<01:43, 77.18patch/s]

46%|███████████████████████████████████████████████████████████████████████████▉ | 6921/14940 [01:29<01:50, 72.34patch/s]

46%|████████████████████████████████████████████████████████████████████████████ | 6929/14940 [01:29<01:49, 73.03patch/s]

46%|████████████████████████████████████████████████████████████████████████████▏ | 6937/14940 [01:29<01:47, 74.78patch/s]

46%|████████████████████████████████████████████████████████████████████████████▏ | 6945/14940 [01:29<01:55, 68.96patch/s]

47%|████████████████████████████████████████████████████████████████████████████▎ | 6953/14940 [01:29<01:51, 71.38patch/s]

47%|████████████████████████████████████████████████████████████████████████████▍ | 6961/14940 [01:29<01:49, 72.63patch/s]

47%|████████████████████████████████████████████████████████████████████████████▌ | 6970/14940 [01:29<01:45, 75.33patch/s]

47%|████████████████████████████████████████████████████████████████████████████▌ | 6978/14940 [01:30<01:50, 71.91patch/s]

47%|████████████████████████████████████████████████████████████████████████████▋ | 6986/14940 [01:30<01:57, 67.73patch/s]

47%|████████████████████████████████████████████████████████████████████████████▊ | 6994/14940 [01:30<01:54, 69.39patch/s]