This example demonstrates how to perform representational similarity analysis (RSA) on EEG data using a searchlight approach.

In the searchlight approach, representational similarity is computed between the model and searchlight “patches”. A patch is defined by a seed point (e.g. sensor Pz) and everything within a given radius of it (e.g. all sensors within 4 cm of Pz). Patches are created for all possible seed points (e.g. all sensors). You can think of it as a “searchlight” that moves from seed point to seed point, and everything that falls within its beam is used in the computation.
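The spatial part of this idea can be sketched in a few lines of NumPy. This is a minimal illustration, not how mne_rsa builds its patches internally. It assumes each sensor has a 3D position in meters and that a patch contains every sensor whose Euclidean distance to the seed is at most the radius. The helper `searchlight_patches` and the sensor layout are hypothetical.

```python
import numpy as np


def searchlight_patches(positions, radius):
    """Hypothetical helper: for every seed sensor, return the indices of all
    sensors within `radius` (same unit as `positions`, here meters)."""
    positions = np.asarray(positions, dtype=float)
    # Pairwise Euclidean distances between all sensors: (n_sensors, n_sensors)
    diff = positions[:, np.newaxis, :] - positions[np.newaxis, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # Row k selects the sensors inside the sphere around seed k.
    # The seed itself is always included, since its distance is 0.
    return [np.flatnonzero(row <= radius) for row in dist]


# Hypothetical layout: four sensors on a line, 3 cm apart (in meters)
positions = np.array([[0.00, 0.0, 0.0],
                      [0.03, 0.0, 0.0],
                      [0.06, 0.0, 0.0],
                      [0.09, 0.0, 0.0]])
patches = searchlight_patches(positions, radius=0.05)
for seed, patch in enumerate(patches):
    print(seed, patch.tolist())
# 0 [0, 1]
# 1 [0, 1, 2]
# 2 [1, 2, 3]
# 3 [2, 3]
```

Patches overlap and the edge sensors get smaller patches. This is inherent to the searchlight idea.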

The radius of a searchlight can be defined in space, in time, or both. In this example, our searchlight will have a spatial radius of 5 cm and a temporal radius of 50 ms.
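A temporal radius translates into a number of samples that depends on the sampling rate. The sketch below assumes data sampled at 100 Hz, the rate this example downsamples to. It lists which samples fall inside a 50 ms window around each time point. Treating the boundary as inclusive is an assumption here, and `temporal_patches` is a hypothetical helper, not part of mne_rsa.

```python
import numpy as np


def temporal_patches(times, radius):
    """Hypothetical helper: for every time point, return the indices of all
    samples within `radius` seconds of it (boundary included)."""
    times = np.asarray(times, dtype=float)
    dist = np.abs(times[:, np.newaxis] - times[np.newaxis, :])
    # A small tolerance guards against floating-point round-off in the time axis
    return [np.flatnonzero(row <= radius + 1e-9) for row in dist]


sfreq = 100.0                     # sampling rate after downsampling (Hz)
times = np.arange(0, 31) / sfreq  # hypothetical time axis: 0 to 300 ms
time_patches = temporal_patches(times, radius=0.05)

# 50 ms at 100 Hz is 5 samples on either side of the center sample
print(time_patches[15].tolist())  # window centered on 150 ms
# [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20]
```

Near the start and end of the time axis the window is cut off, so those patches contain fewer samples.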

We will use the kiloword dataset: approximately 1,000 words were presented to 75 participants in a go/no-go lexical decision task while event-related potentials (ERPs) were recorded.

Authors

Marijn van Vliet <marijn.vanvliet@aalto.fi>


# Import required packages
import mne
import mne_rsa

MNE-Python contains a built-in data loader for the kiloword dataset. We use it here to read the data as 960 epochs. Each epoch represents the brain response to a single word, averaged across all participants. To speed up the computation at the cost of temporal precision, we downsample the data from the original 250 Hz to 100 Hz.

The kiloword data was erroneously stored with sensor locations given in centimeters instead of meters, so we fix that first. In your own data, the sensor locations are most likely already stored in meters, and you can skip this step.
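The unit matters because the searchlight radius is a distance in meters. The NumPy sketch below uses made-up sensor positions to show the effect. If the positions are stored in centimeters, a radius of 0.05 (meant as 5 cm) captures only the seed sensor itself. Dividing by 100 restores the intended patches. How the correction is applied to the epochs object itself is not shown here, and `patch_sizes` is a hypothetical helper.

```python
import numpy as np


def patch_sizes(positions, radius):
    """Hypothetical helper: number of sensors inside the searchlight around each seed."""
    diff = positions[:, np.newaxis, :] - positions[np.newaxis, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    return (dist <= radius).sum(axis=1)


# Hypothetical sensors 3 cm apart, erroneously stored in centimeters
positions_cm = np.array([[0.0, 0.0, 0.0],
                         [3.0, 0.0, 0.0],
                         [6.0, 0.0, 0.0]])
radius = 0.05  # intended as 5 cm, i.e. expressed in meters

sizes_cm = patch_sizes(positions_cm, radius)  # every patch holds only its seed
positions_m = positions_cm / 100.0            # centimeters -> meters
sizes_m = patch_sizes(positions_m, radius)
print(sizes_cm.tolist(), sizes_m.tolist())    # [1, 1, 1] [2, 3, 2]
```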

The epochs object has a .metadata field with information about the 960 words used in the experiment. Let’s look at the metadata for 10 random words:

epochs.metadata.sample(10)

| | WORD | Concreteness | WordFrequency | OrthographicDistance | NumberOfLetters | BigramFrequency | ConsonantVowelProportion | VisualComplexity |
|---|---|---|---|---|---|---|---|---|
| 333 | grail | 4.368421 | 1.397940 | 1.80 | 5.0 | 583.000000 | 0.600000 | 58.636927 |
| 869 | morning | 4.450000 | 3.732956 | 1.85 | 7.0 | 962.428571 | 0.714286 | 66.895457 |
| 410 | despair | 2.400000 | 2.690196 | 2.90 | 7.0 | 517.000000 | 0.571429 | 68.273596 |
| 524 | gist | 3.050000 | 1.505150 | 1.65 | 4.0 | 798.000000 | 0.750000 | 63.279941 |
| 346 | spirit | 2.050000 | 3.081707 | 2.05 | 6.0 | 457.500000 | 0.666667 | 54.358230 |
| 145 | section | 3.800000 | 3.226858 | 1.90 | 7.0 | 592.571429 | 0.571429 | 61.909842 |
| 311 | flesh | 5.300000 | 2.968950 | 1.80 | 5.0 | 380.600000 | 0.800000 | 61.589660 |
| 402 | mention | 3.650000 | 2.344392 | 2.40 | 7.0 | 688.857143 | 0.571429 | 65.643653 |
| 103 | growth | 3.450000 | 3.326541 | 2.35 | 6.0 | 463.500000 | 0.833333 | 71.198055 |
| 833 | sample | 4.350000 | 2.204120 | 1.75 | 6.0 | 624.833333 | 0.666667 | 73.865260 |
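Because sample(10) draws different rows on every call, the table above will differ between runs. The pandas sketch below uses a small stand-in DataFrame built from a few rows of the table (an assumption, not the real epochs.metadata). It shows that passing random_state makes the draw reproducible. It also checks that NumberOfLetters matches the word lengths, since that column drives the model RDM later on.

```python
import pandas as pd

# Hypothetical stand-in for epochs.metadata, using rows from the table above
metadata = pd.DataFrame({
    "WORD": ["grail", "morning", "despair", "gist", "spirit"],
    "NumberOfLetters": [5.0, 7.0, 7.0, 4.0, 6.0],
})

# With a fixed random_state, DataFrame.sample returns the same rows every time
first = metadata.sample(3, random_state=42)
second = metadata.sample(3, random_state=42)
print(first.index.equals(second.index))  # True

# Sanity check: the NumberOfLetters column equals the length of each word
letters_ok = (metadata["WORD"].str.len() == metadata["NumberOfLetters"]).all()
print(letters_ok)  # True
```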

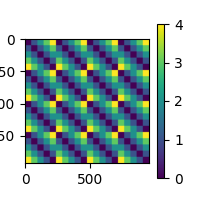

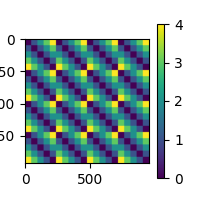

Let’s pick something obvious for this example and build a representational dissimilarity matrix (RDM) based on the number of letters in each word.

rdm_vis = mne_rsa.compute_rdm(epochs.metadata[["NumberOfLetters"]], metric="euclidean")

mne_rsa.plot_rdms(rdm_vis)
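With a single feature, the Euclidean distance between two words is simply the absolute difference in their letter counts. The NumPy sketch below computes this by hand for the ten words in the metadata table. It assumes that mne_rsa.compute_rdm returns the RDM in condensed form: one entry per word pair (i, j) with i < j, in the same order as scipy.spatial.distance.pdist.

```python
from itertools import combinations

import numpy as np

# The ten words from the metadata table above
words = ["grail", "morning", "despair", "gist", "spirit",
         "section", "flesh", "mention", "growth", "sample"]
n_letters = np.array([len(w) for w in words], dtype=float)

# Condensed RDM: one entry per unordered pair (i < j), row-major order.
# For a single feature, Euclidean distance reduces to |n_i - n_j|.
rdm = np.array([abs(n_letters[i] - n_letters[j])
                for i, j in combinations(range(len(words)), 2)])

print(len(rdm))        # 45, i.e. 10 * 9 / 2 pairs
print(rdm[:3])         # grail-morning, grail-despair, grail-gist: [2. 2. 1.]
```

For all 960 words, the condensed RDM has 960 * 959 / 2 = 460,320 entries.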


The above RDM will serve as our “model” RDM. In this RSA analysis, we compare the model RDM against RDMs computed from the EEG data, one for each searchlight patch. For the EEG RDMs we use squared Euclidean distance, since each searchlight patch contains only a few data points. Feel free to experiment with other metrics.
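To make the EEG side concrete, the sketch below computes a squared-Euclidean RDM for a single searchlight patch using random data. It assumes that all sensor and time values of a patch are flattened into one feature vector per epoch. This is a plausible reading of the searchlight idea, not a statement about mne_rsa's internals. The patch dimensions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical data for ONE searchlight patch:
# 10 epochs (words) x 3 sensors x 11 time samples
n_epochs, n_sensors, n_times = 10, 3, 11
patch = rng.standard_normal((n_epochs, n_sensors, n_times))

# Assumption: each epoch's sensors x time values form one feature vector
X = patch.reshape(n_epochs, -1)

# Squared Euclidean distance between every pair of epochs
diff = X[:, np.newaxis, :] - X[np.newaxis, :, :]
dist_sq = (diff ** 2).sum(axis=-1)

# Keep only the upper triangle (i < j) to get a condensed RDM
i, j = np.triu_indices(n_epochs, k=1)
eeg_rdm = dist_sq[i, j]
print(eeg_rdm.shape)  # (45,)
```

Repeating this for every patch produces one EEG RDM per seed point and time point. Each of these RDMs is then compared with the model RDM.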

rsa_result = mne_rsa.rsa_epochs(
    epochs,  # The EEG data
    rdm_vis,  # The model RDM
    epochs_rdm_metric="sqeuclidean",  # Metric to compute the EEG RDMs
    rsa_metric="kendall-tau-a",  # Metric to compare model and EEG RDMs
    spatial_radius=0.05,  # Spatial radius of the searchlight patch in meters
    temporal_radius=0.05,  # Temporal radius of the searchlight patch in seconds
    tmin=0.15,
    tmax=0.25,  # To save time, only analyze this time interval
    n_jobs=1,  # Only use one CPU core. Increase this for more speed.
    n_folds=None,  # Don't use any cross-validation
    verbose=False,  # Set to True to display a progress bar
)
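The rsa_metric="kendall-tau-a" setting compares the model and EEG RDMs by rank. Tau-a counts concordant minus discordant pairs and divides by the total number of pairs. Unlike tau-b, which scipy.stats.kendalltau computes by default, it applies no correction for ties. The plain NumPy version below is a reference sketch of that definition, not mne_rsa's implementation. Its O(n²) pair enumeration is far too slow for RDMs with hundreds of thousands of entries.

```python
import numpy as np


def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) / total number of pairs.

    Tied pairs count as neither concordant nor discordant but still
    appear in the denominator (no tie correction, unlike tau-b).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    i, j = np.triu_indices(n, k=1)
    # +1 for concordant pairs, -1 for discordant pairs, 0 if either is tied
    s = np.sign(x[i] - x[j]) * np.sign(y[i] - y[j])
    return s.sum() / (n * (n - 1) / 2)


model = [2, 2, 1, 0, 3]            # hypothetical model RDM entries
eeg = [1.5, 2.5, 0.5, 0.1, 3.0]    # hypothetical EEG RDM entries
print(kendall_tau_a(model, eeg))   # 0.9 (9 concordant pairs, 1 tie, out of 10)
```

Tau-a depends only on the ordering of the RDM entries. Any monotonic transform of non-negative distances, such as squaring Euclidean distances, therefore leaves the RSA value unchanged.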

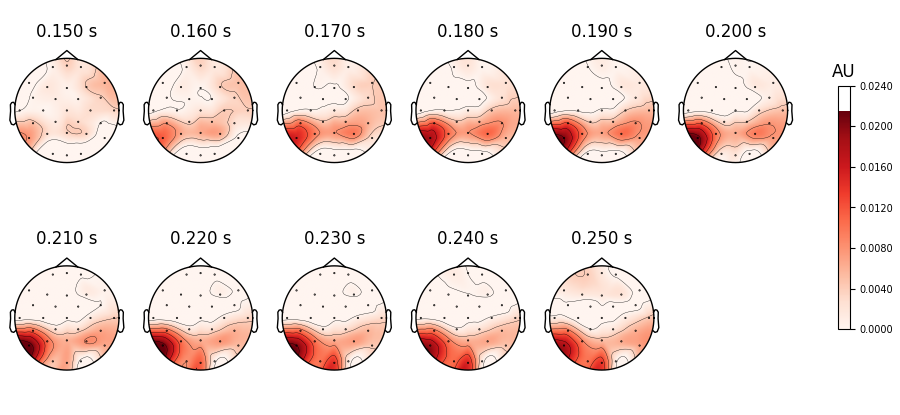

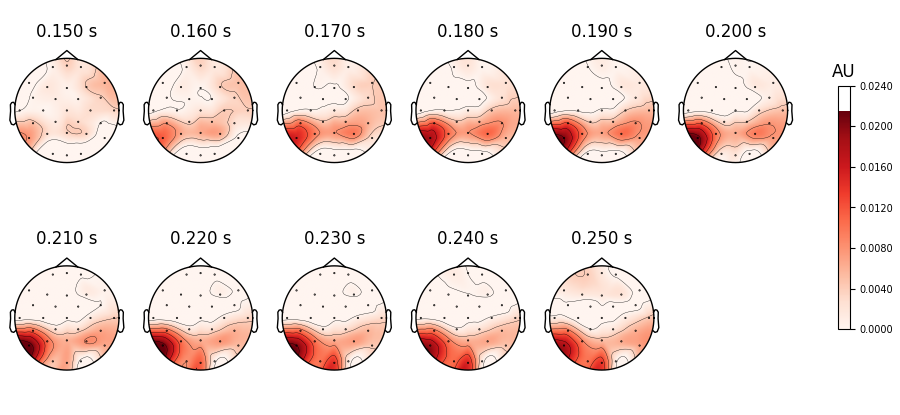

The result is packed inside an MNE-Python mne.Evoked object. This object defines many plotting functions, for example mne.Evoked.plot_topomap(), which shows the spatial distribution of the RSA values. By default, EEG signals are assumed to be in volts and are scaled to microvolts for plotting. We therefore need to tell the plotting function explicitly that we are plotting RSA values, and adjust the range of the colormap.


Unsurprisingly, the correspondence between the number of letters and the EEG signal is highest at the sensors over the visual word form area.

Total running time of the script: (0 minutes 58.675 seconds)

Gallery generated by Sphinx-Gallery